How to Measure RAG from Accuracy to Relevance?

Retrieval-Augmented Generation (RAG) is a powerful technique that enhances AI-generated responses by pulling relevant context from an external knowledge base, typically stored in a vector database.

However, building and effectively evaluating a RAG system can be a challenging endeavor. The true power of RAG lies not just in its architecture but in its performance across multiple stages of retrieval and generation. To ensure the system is delivering high-quality output, a robust evaluation framework is essential.

In this article, we’ll dive into the core metrics needed to evaluate every stage of your RAG pipeline, from the retrieval process to the generation output.

Understanding Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) combines the strengths of two popular AI approaches: retrieval-based methods and generative models, typically large language models (LLMs).

Rather than producing answers solely from what the model learned during training, RAG searches external data sources, often stored in a vector database, to extract pertinent details and context. This greatly improves the specificity and factual grounding of the generated answers, especially for tasks that require current or comprehensive information.

RAG lets systems go beyond the knowledge captured during pre-training, so they can offer accurate, contextually rich answers and insights.

This makes RAG especially helpful when the model needs specific or real-time information to generate a response, for example in customer service or research. In essence, RAG bridges the gap between generating content and ensuring that it is supported by accurate data.
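To make the retrieve-then-generate flow concrete, here is a minimal Python sketch. It is illustrative only: the keyword-overlap retriever stands in for a real vector database search, and `generate` is a hypothetical placeholder for whatever LLM call your stack uses.

```python
from typing import Callable


def tokenize(text: str) -> set[str]:
    # Lowercase, strip basic punctuation from each word, and keep unique words.
    return {w.strip(".,?!").lower() for w in text.split()}


def retrieve(query: str, corpus: list[str], k: int = 2) -> list[str]:
    # Toy retriever: rank documents by how many query words they share.
    # A real RAG system would query a vector database instead.
    q = tokenize(query)
    ranked = sorted(corpus, key=lambda doc: len(q & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    # Place the retrieved passages before the question so the generator
    # answers from them rather than from its training data alone.
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


def rag_answer(query: str, corpus: list[str], generate: Callable[[str], str]) -> str:
    # `generate` is a hypothetical hook for an LLM call.
    return generate(build_prompt(query, retrieve(query, corpus)))


corpus = [
    "The Eiffel Tower is in Paris.",
    "Bananas are rich in potassium.",
    "Paris is the capital of France.",
]
print(rag_answer("What is the capital of France?", corpus, generate=lambda p: p))
```

Passing an echo function as `generate` lets you inspect the augmented prompt before wiring in a real model.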

The Importance of RAG Evaluation

Evaluating Retrieval-Augmented Generation (RAG) systems is essential because it helps ensure that the model produces precise, relevant, high-quality outputs.

RAG systems rely on external data sources to answer questions, but without proper evaluation there is no way to confirm that this data is being used appropriately. Evaluation shows whether the system is retrieving the right context and whether the generated responses make sense and address the task at hand.

Evaluation metrics can reveal cases where the model retrieves irrelevant data or produces confusing answers, pointing to weaknesses or areas for improvement in the RAG system. You can use this feedback to improve the retrieval procedure, the generative model, or both. Ultimately, thorough and frequent evaluation is the key to keeping a RAG system accurate, effective, and efficient over time.
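One practical way to act on this feedback is to score the retrieval and generation stages separately, so each failure can be traced to the stage that caused it. The sketch below assumes a hypothetical labelled test set in which every question records the ID of the passage containing its answer, the IDs the retriever returned, and whether the final answer was judged correct.

```python
from dataclasses import dataclass


@dataclass
class EvalCase:
    # Hypothetical record for one labelled test question.
    gold_doc_id: str          # ID of the passage that contains the answer
    retrieved_ids: list[str]  # IDs returned by the retriever, in rank order
    answer_correct: bool      # judged by a human or against a reference answer


def diagnose(case: EvalCase) -> str:
    # If the answer-bearing passage never reached the generator, blame retrieval.
    if case.gold_doc_id not in case.retrieved_ids:
        return "retrieval failure"
    # The right context was available, so a wrong answer points at generation.
    if not case.answer_correct:
        return "generation failure"
    return "ok"


def failure_report(cases: list[EvalCase]) -> dict[str, int]:
    report = {"ok": 0, "retrieval failure": 0, "generation failure": 0}
    for case in cases:
        report[diagnose(case)] += 1
    return report


cases = [
    EvalCase("doc-1", ["doc-1", "doc-4"], True),
    EvalCase("doc-2", ["doc-7", "doc-9"], False),
    EvalCase("doc-3", ["doc-3"], False),
]
print(failure_report(cases))
# {'ok': 1, 'retrieval failure': 1, 'generation failure': 1}
```

In this sketch a case whose answer happens to be correct even though the gold passage was missed still counts as a retrieval failure, because the answer was not grounded in retrieved data; you may prefer a separate category for it.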

What Are the Essential Elements of a RAG System?

Embedding Models

Embedding models are a crucial component of RAG systems. They transform raw text into dense vector representations that let computers capture the meaning of words and phrases. Because these embeddings encode semantic relationships, texts with similar meanings end up close together in vector space, which makes fast retrieval of relevant information possible. It is essential to select an embedding model suited to the specific task at hand.
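The mechanics of embedding-based search can be sketched as follows. A real embedding model is a trained neural network; the `toy_embed` function below is a hypothetical stand-in that simply hashes words into a fixed number of buckets, so it captures word overlap but no semantics. The cosine-similarity ranking, however, is the same kind of operation a vector database performs over real embeddings.

```python
import hashlib
import math

DIM = 64  # hypothetical vector size; real embedding models use hundreds of dimensions


def toy_embed(text: str) -> list[float]:
    # Stand-in for a trained embedding model: hash each word into one of DIM
    # buckets and count occurrences. This captures word overlap, not meaning.
    vec = [0.0] * DIM
    for word in text.lower().split():
        bucket = int(hashlib.md5(word.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    return vec


def cosine(a: list[float], b: list[float]) -> float:
    # 1.0 for vectors pointing the same way, 0.0 for orthogonal (or zero) vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(query: str, docs: list[str], k: int = 1) -> list[str]:
    # Rank documents by similarity to the query vector and keep the top k.
    q = toy_embed(query)
    return sorted(docs, key=lambda d: cosine(q, toy_embed(d)), reverse=True)[:k]
```

Swapping `toy_embed` for a real embedding model is what turns this keyword-like matcher into genuine semantic search.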

Retrievers

The retriever is the first step in the RAG pipeline and is responsible for locating and fetching relevant information from a large body of data. Retrievers typically use one of two main methods: sparse retrieval or dense retrieval. Sparse retrieval, such as BM25, is based on keyword matching and is well suited to searching large document collections when speed is a major consideration. Dense retrieval instead compares embedding vectors of the query and the documents, so it can find passages that match in meaning even when they share few exact keywords.
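For sparse retrieval, a compact version of BM25 scoring might look like the sketch below. It assumes whitespace tokenisation, common default parameters (`k1 = 1.2`, `b = 0.75`) and a widely used non-negative IDF variant; production search engines add stemming, stop-word handling and inverted indexes.

```python
import math
from collections import Counter


def bm25_scores(query: str, docs: list[str], k1: float = 1.2, b: float = 0.75) -> list[float]:
    if not docs:
        return []
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: the number of documents containing each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue  # keyword matching: absent terms contribute nothing
            # Rare terms get a higher inverse document frequency (IDF) weight.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            # Term-frequency saturation (k1) and document-length normalisation (b).
            denom = tf[term] + k1 * (1 - b + b * len(tokens) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


docs = ["the cat sat on the mat", "dogs chase cats", "the stock market fell"]
print(bm25_scores("cat mat", docs))
```

The `k1` parameter controls how quickly repeated occurrences of a term stop adding to the score, while `b` controls how strongly long documents are penalised. Note that "cats" does not match "cat" here, which is exactly the kind of gap dense retrieval is meant to close.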

Generators

The generator in a RAG system turns the information gathered by the retriever into text that is grammatically correct, appropriate to the context, and readable by humans. A powerful generator is necessary for complex queries or large amounts of retrieved data, to ensure that the RAG system produces results that read as natural and credible.
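The generator only sees what the retriever hands it, so the retrieved passages have to be packed into the prompt within the model’s context limit. The following sketch assumes a simple word budget (a real system would count model tokens) and numbers each source so the generator can cite it; the prompt wording is an illustrative assumption, not a prescribed template.

```python
def pack_context(passages: list[str], max_words: int = 50) -> str:
    # Add passages in rank order until the word budget is spent. A real
    # system would count model tokens rather than whitespace-separated words.
    chosen, used = [], 0
    for passage in passages:
        n = len(passage.split())
        if used + n > max_words:
            break
        chosen.append(passage)
        used += n
    # Number each source so the generator can cite it as [1], [2], ...
    return "\n".join(f"[{i}] {p}" for i, p in enumerate(chosen, start=1))


def grounded_prompt(question: str, passages: list[str], max_words: int = 50) -> str:
    # Illustrative instructions; the wording is an assumption, not a fixed template.
    return (
        "Answer the question using only the numbered sources below and cite them, "
        "for example [1]. If the sources do not contain the answer, say so.\n\n"
        f"{pack_context(passages, max_words)}\n\nQuestion: {question}\nAnswer:"
    )
```

Stopping at the first passage that does not fit keeps the strict rank order; skipping it and trying shorter, lower-ranked passages is an equally valid design choice.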

Essential KPIs for RAG Frameworks

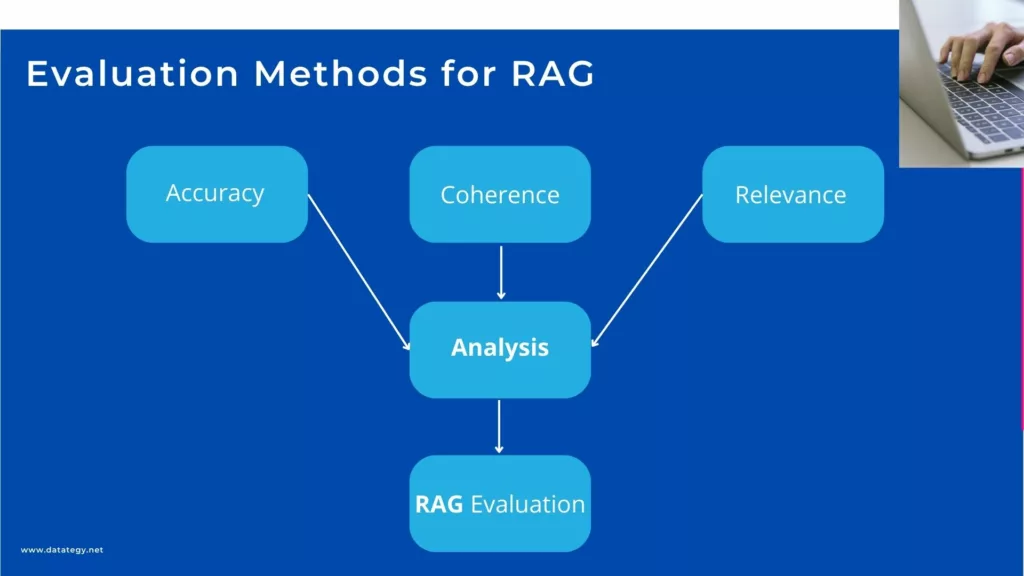

Accuracy

In Retrieval-Augmented Generation (RAG) models, accuracy describes how correct the system’s output is.

Because RAG systems combine external data retrieval with text generation, accuracy depends on two things: how well the system extracts pertinent information from its database, and how well it applies that information to answer the query or complete the task. Retrieving the wrong information, or misusing the right information, both reduce the overall accuracy of the response.

Assessing accuracy in RAG models means measuring how often the system responds correctly, from both a retrieval and a generation perspective. This is typically done either by having human judges decide whether each response is correct or by comparing the system’s output with a known “correct” answer.

Accuracy metrics let developers check whether the model is giving correct information and whether the text it produces faithfully reflects the facts it retrieved.
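Where reference answers exist, accuracy is often computed automatically. The sketch below shows two common measures from question answering: exact match after normalisation, and token-level F1, which gives partial credit for overlapping words. The normalisation steps (lowercasing, removing punctuation and the articles “a”, “an” and “the”) follow the style of the SQuAD evaluation script but are an assumption here.

```python
import re
import string
from collections import Counter


def normalize(text: str) -> list[str]:
    # Lowercase, drop punctuation and the articles a/an/the, then split into words.
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return text.split()


def exact_match(prediction: str, reference: str) -> bool:
    return normalize(prediction) == normalize(reference)


def token_f1(prediction: str, reference: str) -> float:
    pred, ref = normalize(prediction), normalize(reference)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def accuracy(predictions: list[str], references: list[str]) -> float:
    # Share of predictions that exactly match their reference answer.
    hits = sum(exact_match(p, r) for p, r in zip(predictions, references))
    return hits / len(references) if references else 0.0
```

For example, “Paris, France” against the reference “Paris” fails exact match but scores a token F1 of about 0.67, which reflects that the answer is right but padded.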

Coherence

Coherence is about ensuring that the generated responses in Retrieval-Augmented Generation (RAG) models make sense and flow naturally. Even when a RAG system retrieves accurate data, it must still present that information in a comprehensible way.

In a coherent response, the parts fit together logically, which makes it easy to follow. An output that lacks coherence may come across as jumbled or unclear even when its facts are correct.

Assessing coherence is crucial because it shapes users’ perception of the RAG system’s quality. If users find responses disjointed or badly organised, they may become dissatisfied or lose trust in the system, even when the data is correct. Coherence complements technical accuracy by making the model’s outputs easy to read and use.
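Coherence is usually judged by human raters or by an LLM acting as a grader, but a crude automatic signal can help flag obviously disjointed outputs. The sketch below is one such heuristic, not a standard RAG metric: it measures lexical overlap (Jaccard similarity) between consecutive sentences, on the assumption that a response which jumps between unrelated topics shares few words from one sentence to the next.

```python
import re


def sentences(text: str) -> list[str]:
    # Naive splitter: break after ., ! or ? followed by whitespace.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def words(sentence: str) -> set[str]:
    return set(re.findall(r"[a-z']+", sentence.lower()))


def adjacent_overlap(text: str) -> float:
    """Mean Jaccard overlap between consecutive sentences (0 to 1)."""
    sents = [words(s) for s in sentences(text)]
    if len(sents) < 2:
        return 1.0  # a single sentence has no transitions to judge
    scores = []
    for a, b in zip(sents, sents[1:]):
        union = a | b
        scores.append(len(a & b) / len(union) if union else 0.0)
    return sum(scores) / len(scores)
```

A low score does not prove incoherence, since good writing often varies its vocabulary, so treat it as a screening signal alongside human review.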

Relevance

Relevance measures whether the response directly addresses the user’s query or task. In Retrieval-Augmented Generation (RAG) systems, it applies to both the retrieved data and the generated response: each should be suitable for the given context.

For instance, when a user asks about the weather in Paris, a relevant response should address the weather rather than unrelated details such as the city’s history. Relevance is central to making sure the system delivers meaningful responses.

To determine relevance, RAG systems are frequently tested with human evaluators or with automated metrics that compare the output to predefined correct responses. This shows how closely the generated content adheres to the original task or question.

Responses that frequently contain irrelevant material or omit important details indicate that the system’s relevance needs improvement. User feedback helps developers optimise the system to retrieve and produce more targeted content.
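On the retrieval side, relevance is often measured against a labelled set of relevant documents for each query. The sketch below implements three standard ranking metrics, precision@k, recall@k and mean reciprocal rank (MRR); the document IDs and relevance labels are assumed to come from your own test set.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    # Fraction of the top-k results that are relevant.
    return sum(doc in relevant for doc in retrieved[:k]) / k


def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    # Fraction of all relevant documents that appear in the top k.
    if not relevant:
        return 0.0
    return sum(doc in relevant for doc in retrieved[:k]) / len(relevant)


def reciprocal_rank(retrieved: list[str], relevant: set[str]) -> float:
    # 1 / rank of the first relevant result, or 0 if none was retrieved.
    for rank, doc in enumerate(retrieved, start=1):
        if doc in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs: list[tuple[list[str], set[str]]]) -> float:
    return sum(reciprocal_rank(r, rel) for r, rel in runs) / len(runs)


retrieved = ["d3", "d1", "d7", "d2"]
relevant = {"d1", "d2"}
print(precision_at_k(retrieved, relevant, 2), recall_at_k(retrieved, relevant, 4))
# 0.5 1.0
```

Precision@k penalises irrelevant material in the context window, while recall@k reveals when important passages are being missed, mirroring the two failure modes described above.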

Evaluation Methods for RAG

Popular Tools for Measuring RAG Performance

BLEU (Bilingual Evaluation Understudy)

BLEU is a popular metric for assessing the language generation side of RAG systems. It compares the system’s output with one or more reference texts and counts matching n-grams (in its standard form, sequences of one to four words). The more the generated response overlaps with the reference answers, the higher the BLEU score; a brevity penalty keeps very short outputs from scoring well simply by being precise. BLEU is well suited to basic evaluation, but it may not capture fluency or creativity in more demanding tasks.
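The core of BLEU can be sketched in a few lines: clipped n-gram precision for n = 1 to 4, combined by a geometric mean and multiplied by the brevity penalty. This is an unsmoothed, sentence-level sketch with simple whitespace tokenisation; for scores you intend to report, an established implementation such as sacreBLEU is the safer choice.

```python
import math
from collections import Counter


def ngrams(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def sentence_bleu(candidate: str, references: list[str], max_n: int = 4) -> float:
    cand = candidate.lower().split()
    refs = [r.lower().split() for r in references]
    if not cand:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        cand_counts = ngrams(cand, n)
        # Clip each n-gram count by its maximum count in any single reference.
        max_ref = Counter()
        for ref in refs:
            for gram, count in ngrams(ref, n).items():
                max_ref[gram] = max(max_ref[gram], count)
        clipped = sum(min(c, max_ref[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if clipped == 0 or total == 0:
            return 0.0  # unsmoothed BLEU is zero if any n-gram order has no match
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty against the reference length closest to the candidate's.
    c = len(cand)
    r = min((abs(len(ref) - c), len(ref)) for ref in refs)[1]
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(sum(log_precisions) / max_n)


print(sentence_bleu("the cat sat on the mat", ["the cat sat on the mat"]))  # 1.0
```

Clipping is what stops a degenerate output such as “the the the the” from earning full unigram precision.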

ROUGE (Recall-Oriented Understudy for Gisting Evaluation)

ROUGE is another widely used metric, particularly for tasks such as summarisation. It measures how closely the system-generated content resembles the reference content. ROUGE focuses on recall: how much of the important information in the reference is included in the output. Common variants include ROUGE-N, which counts overlapping n-grams, and ROUGE-L, which is based on the longest common subsequence. This makes ROUGE well suited to checking whether a RAG model incorporates the right information, especially in summarisation or rephrasing tasks.
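ROUGE-N recall and ROUGE-L can be sketched as follows. This assumes whitespace tokenisation without stemming and reports ROUGE-L as an F1 score built from the longest common subsequence; packaged implementations offer more options.

```python
from collections import Counter


def _grams(tokens: list[str], n: int) -> Counter:
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n_recall(candidate: str, reference: str, n: int = 1) -> float:
    # Share of the reference's n-grams that also appear in the candidate.
    cand = _grams(candidate.lower().split(), n)
    ref = _grams(reference.lower().split(), n)
    total = sum(ref.values())
    if total == 0:
        return 0.0
    return sum((cand & ref).values()) / total


def lcs_length(a: list[str], b: list[str]) -> int:
    # Classic dynamic-programming longest common subsequence.
    dp = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, x in enumerate(a, 1):
        for j, y in enumerate(b, 1):
            dp[i][j] = dp[i - 1][j - 1] + 1 if x == y else max(dp[i - 1][j], dp[i][j - 1])
    return dp[-1][-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    cand, ref = candidate.lower().split(), reference.lower().split()
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(cand), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)
```

Because ROUGE-L uses a subsequence rather than contiguous n-grams, it rewards outputs that keep the reference’s word order even when other words are inserted in between.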

METEOR (Metric for Evaluation of Translation with Explicit ORdering)

METEOR goes beyond BLEU and ROUGE by combining precision and recall (weighted towards recall) and penalising outputs whose matched words are scattered out of order. It also considers stemming and synonymy, so it can match terms with the same meaning, such as “smart” and “intelligent.” As a result, it is better at assessing how well a RAG model captures meaning rather than just word-for-word correspondence.
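To show how METEOR balances precision, recall and word order, here is a simplified version that handles only the exact-match stage. It follows the original formulation, in which the harmonic mean is weighted towards recall, 10PR / (R + 9P), and a fragmentation penalty of 0.5 × (chunks / matches)³ scales the score down. It is a sketch under stated simplifications: it aligns words greedily rather than searching for the alignment with the fewest chunks, and it skips the stemming and synonym stages, so “smart” and “intelligent” would not match here (NLTK’s `meteor_score` adds WordNet-based synonym matching).

```python
def meteor_exact(candidate: str, reference: str) -> float:
    cand, ref = candidate.lower().split(), reference.lower().split()
    # Greedy left-to-right alignment: each candidate word takes the first
    # unused identical reference word (real METEOR minimises chunk count).
    used, pairs = set(), []
    for i, word in enumerate(cand):
        for j, ref_word in enumerate(ref):
            if j not in used and ref_word == word:
                used.add(j)
                pairs.append((i, j))
                break
    m = len(pairs)
    if m == 0:
        return 0.0
    precision, recall = m / len(cand), m / len(ref)
    f_mean = 10 * precision * recall / (recall + 9 * precision)
    # A chunk is a run of matches that are contiguous in both sentences.
    chunks = 1
    for (i1, j1), (i2, j2) in zip(pairs, pairs[1:]):
        if i2 != i1 + 1 or j2 != j1 + 1:
            chunks += 1
    penalty = 0.5 * (chunks / m) ** 3
    return f_mean * (1 - penalty)
```

A candidate with the same words in a scrambled order keeps full precision and recall but forms more chunks, so its score drops; that ordering sensitivity is what the fragmentation penalty adds over plain unigram overlap.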

BERTScore

BERTScore takes a deep learning approach: it uses a pretrained transformer model (originally BERT) to compute contextual embeddings for each token in the generated and reference texts, then matches tokens by cosine similarity. Because it checks whether the meaning of the response is close to the reference rather than relying on surface-level matches, BERTScore is an excellent option for tasks where understanding context and semantics is essential.
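The matching step at the heart of BERTScore is simple once token embeddings are available: each token is paired with its most similar counterpart by cosine similarity, and the similarities are averaged into precision, recall and F1. The sketch below assumes the embeddings have already been computed by a contextual model and takes them as plain lists of floats; it omits the optional IDF weighting and baseline rescaling described by the BERTScore authors. The `bert_score` Python package provides a full implementation.

```python
import math

Vector = list[float]


def _cos(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def bertscore_from_embeddings(cand: list[Vector], ref: list[Vector]) -> tuple[float, float, float]:
    # Pairwise cosine similarity between every candidate and reference token.
    sims = [[_cos(c, r) for r in ref] for c in cand]
    # Precision: each candidate token matched to its most similar reference token.
    precision = sum(max(row) for row in sims) / len(cand)
    # Recall: each reference token matched to its most similar candidate token.
    recall = sum(max(sims[i][j] for i in range(len(cand))) for j in range(len(ref))) / len(ref)
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

With real contextual embeddings, “smart” and “intelligent” would land close together, so this greedy matching credits paraphrases that n-gram metrics miss.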


Real-World RAG Implementation: Use Cases

Legal Document Analysis

Law firms can use RAG to analyse legal documents and surface pertinent case law citations. When a lawyer asks about precedents for a particular type of case, the RAG system retrieves relevant legal texts and generates a summary that includes case outcomes and interpretations. This speeds up legal research and helps attorneys strengthen their arguments.

Content Creation for Marketing

RAG can produce marketing content tailored to particular audiences. For example, a marketer can use a RAG model to write a well-structured blog post about sustainability trends: the model retrieves recent articles on the topic and incorporates the latest insights, making the content more engaging and relevant.

E-Learning Platforms

In educational environments, RAG can offer personalised learning experiences. When a student has specific questions about a difficult subject, the RAG system finds pertinent course materials, such as lecture notes and videos, and provides explanations or summaries tailored to the student’s learning preferences. This customised approach improves comprehension and retention of the material.

Research Assistant Tools

RAG systems help researchers speed up literature reviews. When a researcher enters a topic, the RAG system retrieves pertinent academic papers, articles, and data, then generates a succinct summary of the findings. This makes it easier for researchers to understand the current state of the art in their field and spot areas that need further investigation.

E-commerce Product Recommendations

RAG can improve product recommendations in e-commerce. When customers ask for recommendations based on their past purchases, the RAG system pulls product specifications, reviews, and comparisons to produce tailored suggestions that suit each customer’s tastes. This enhances the shopping experience and can raise conversion rates.

Technical Support Systems

Technical support teams can use RAG to solve problems. When a user describes a problem with a device, the RAG system retrieves pertinent technical documentation and troubleshooting guides and generates step-by-step solutions. Users can then resolve their problems without long waits.

How to Choose the Right Platform to Create and Deploy Your RAG System

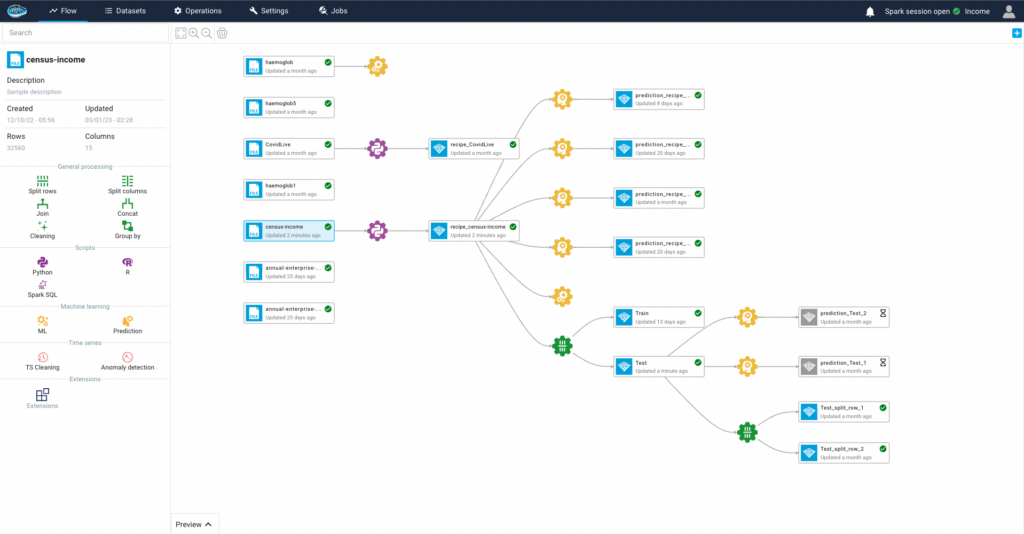

Overview of papAI 7

papAI is a comprehensive AI solution designed to help businesses deploy and scale data science and AI projects seamlessly. It was built with teamwork as its primary focus, enabling groups to collaborate effectively on a single platform, simplifying challenging jobs and optimizing workflows.

It provides a comprehensive set of tools in one place, including robust data cleaning, data exploration and visualization, sophisticated machine learning methods, and model deployment options. With papAI, organizations can take on their most difficult projects while staying agile and competitive in the rapidly changing AI market.

No-Code & Low-Code Approach

First and foremost, papAI offers an intuitive interface that streamlines the entire process of creating and maintaining RAG models. The platform is easy to use even for non-technical users, making it accessible to a wide range of organizations, from startups to well-established businesses. This ease of use lets teams concentrate on improving their applications instead of getting bogged down in intricate details.

The Power of Scalability

papAI is designed with scalability in mind. The platform adjusts to your needs, whether you are starting with a small project or scaling up for large-scale production use. By running on cloud-based infrastructure, papAI helps ensure that your RAG system can handle variable loads of real-time requests without sacrificing performance. This flexibility benefits businesses that expect growth or fluctuating user demand, since it lets them reallocate resources as needed.

Real-time Monitoring & Continuous Improvement

The papAI platform emphasises robust monitoring and continuous improvement. Users can easily track their RAG system’s performance, including response accuracy, user engagement, and areas for improvement. This integrated analytics capability helps teams iterate on their models efficiently and make data-driven decisions. By choosing papAI, businesses get not only a powerful RAG deployment tool but also the means to keep their systems performing well and staying relevant in a constantly evolving digital environment.

Build Your Own RAG System to Meet Your Specific Needs

To sum up, RAG is used across many domains, which demonstrates its adaptability and its effectiveness in improving information retrieval and content creation. A RAG system that performs well on accuracy, coherence, and relevance responds to users in a way they can trust and understand, greatly enhancing productivity and user experience across a range of applications.

Schedule a demo to see papAI in action and discover how a RAG system can be tailored to your requirements. Our platform provides the resources and support needed to design a personalized RAG solution that fits seamlessly into your operations. Set up a demo with papAI today to discover how it can benefit your company and improve engagement and operations.

