The benefits of explicability in AI ethics

In recent years, ethics has become an increasingly prominent concern in every industry sector.

Each industry has its own ethical issues, and the data sector, AI in particular, is no exception.

As AI becomes more prominent in our personal lives, the need for transparency has become essential. AI models use such vast volumes of data and build such complex neural networks to produce their results that even the scientists who design them may not know exactly what is going on inside the analyses the algorithms produce.

It is in this context that the concepts of explainability (also called explicability) and interpretability have taken on new dimensions.

The demand for a “right to explanation” is what has led to the emergence of XAI.

1 - What is XAI, and why do we need it?

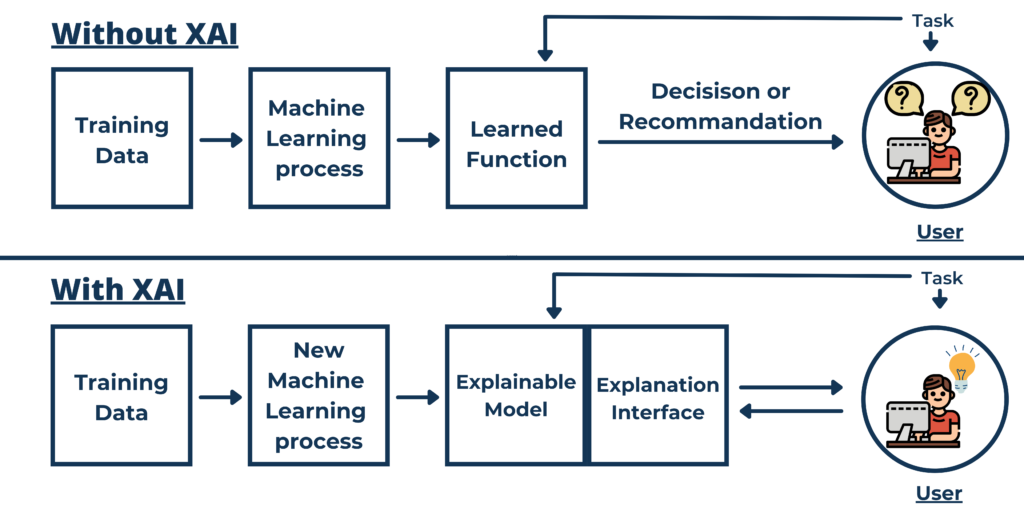

Explainable Artificial Intelligence, also known as XAI, consists of processes integrated into AI models that help human users understand the steps and results produced by machine learning algorithms. Its role is comparable to that of an aircraft's “black box” flight recorder, which makes it possible to reconstruct what happened.

More than just facilitating understanding, XAI allows us to achieve a wide range of other objectives to reinforce the ethical aspects of machine learning projects.

For example, understanding how predictions are made lets us check that they are unbiased, which helps prevent discrimination against under-represented groups.
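One simple way to look for this kind of bias is to compare how often a model returns a positive prediction for each group. The sketch below is only an illustration on made-up data: the function names, the group labels "A" and "B", and the predictions are all assumptions, and a gap between groups is a signal to investigate rather than proof of discrimination.

```python
from collections import defaultdict


def positive_rate_by_group(predictions, groups):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += prediction
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(predictions, groups):
    """Return the difference between the highest and lowest positive rates."""
    rates = positive_rate_by_group(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Made-up predictions (1 = positive outcome) and hypothetical group labels.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = positive_rate_by_group(predictions, groups)
gap = demographic_parity_gap(predictions, groups)
print(rates, gap)
```

A gap close to zero means both groups receive positive predictions at a similar rate; a large gap suggests looking more closely at which inputs drive the model's decisions.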

Privacy is also improved, because if we understand the data a model is using, we can prevent it from accessing sensitive information.

Finally, it helps to build people's trust: if they can understand how a machine learning model arrives at its decisions, it is easier for them to trust it.

2 - What are the differences between interpretability and explainability for machine learning models?

These two words are often used interchangeably. Although they are similar, there are significant differences between them.

A machine learning model is interpretable if we can figure out how it arrived at a specific solution, meaning that cause and effect can be determined. This is the ability to predict what will happen given a change in the input data or in the algorithm's settings.

A model is explainable if we can understand the internal mechanics of a machine learning or deep learning system, for example how a particular node in a complex model influences the output.

Interpretability means being able to discern mechanisms without necessarily knowing the reason.

Explainability means being able to literally explain what is going on.

To give an example, imagine that you are performing a chemistry experiment. It is interpretable because you can see what you are doing and observe the result, but it is only explainable once you have studied the chemistry behind it. The same principle applies to a machine learning model.
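To make the interpretability side concrete, the sketch below changes one input of a toy model and observes how the prediction moves, without looking at the model's internals. Everything in it is an assumption made for illustration: the feature names, the weights, the bias, and the logistic form are hypothetical and do not describe any particular product or dataset.

```python
import math

# Hypothetical weights and bias of a toy logistic model (assumptions).
WEIGHTS = {"logins_per_day": 0.8, "wallet_points": 0.01}
BIAS = -1.0


def predict_probability(features):
    """Return the toy model's probability for the positive outcome."""
    score = BIAS + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-score))


def effect_of_change(features, name, new_value):
    """Return how much the prediction moves when one input is changed."""
    changed = {**features, name: new_value}  # copy, so the original is untouched
    return predict_probability(changed) - predict_probability(features)


# A made-up input: 3 logins per day and an empty wallet.
customer = {"logins_per_day": 3, "wallet_points": 0}
delta = effect_of_change(customer, "logins_per_day", 4)
print(f"Change in predicted probability: {delta:+.3f}")
```

Observing that one more login per day raises the predicted probability is interpretation: cause and effect are visible from the outside. Explaining why would require studying the weights and the structure of the model itself.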

In addition to addressing transparency and ethical issues, understanding how an algorithm actually works helps data scientists and analysts optimize their work and focus on the fundamental issues and requirements of their organizations.

3 - What are the limits of XAI?

Not everyone agrees that XAI should be applied systematically to AI models.

Skeptics give several reasons, three of which come up quite often.

The first, and most obvious, is that AI models are sometimes too complex to explain, especially to an ordinary user. Hundreds of millions of parameters can be involved in making a single decision.

The second is more technical. Making an AI process explainable requires additional parameters, which places a heavy extra load on the software and risks reducing its performance and, in turn, producing a less accurate model. However, software is becoming more and more powerful, which makes this argument less valid.

The last argument is more philosophical than technical. Humans cannot explain all of their decisions: most human thinking and decision-making happens unconsciously.

If we cannot explain all of our own decisions, can we really expect an artificial intelligence to explain its own?

4 - The explainable side of papAI

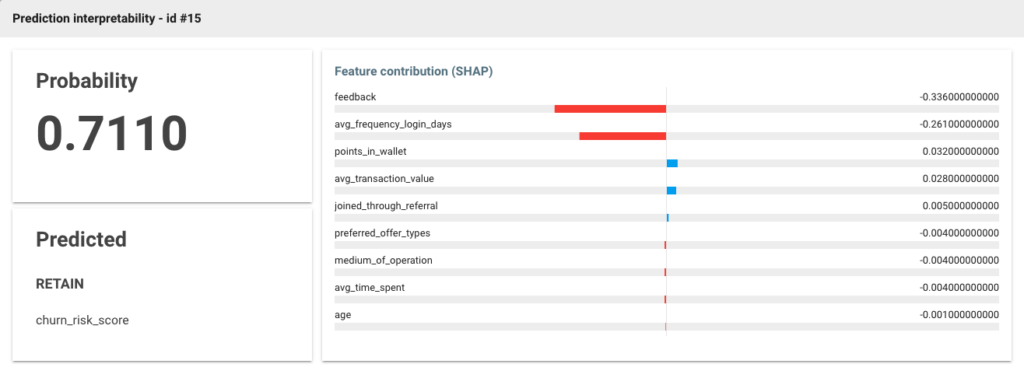

Let’s take a marketplace use case.

You want to predict whether new customers will continue to use your website or leave for the competition.

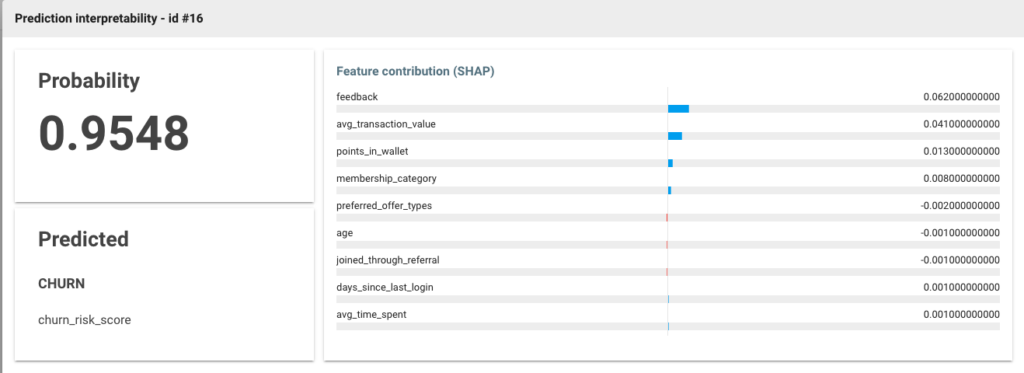

From the customer base, you train a satisfactory model. With papAI, you can then access “local interpretability”, which explains the model's reasoning for each individual customer.

This customer, for example, left the feedback “Reasonable Price” and logged on to your site an average of 3 times a day.

As shown in blue, the positive class is the CHURN class. The model predicts that he will stay on your site, with a 71% probability of belonging to the RETAIN class. That probability is tempered by the low number of points in his wallet (0) and by his average transaction value, both of which weigh against retention.
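The general idea behind a per-customer explanation can be sketched with a toy linear score, where each feature's contribution is its weight multiplied by how far the customer deviates from a baseline customer. This is not papAI's actual method, which the article does not describe: the weights, the baseline, the feature names, and the customer's average transaction value below are all hypothetical values chosen for illustration.

```python
# Hypothetical weights of a toy linear retention score (assumptions).
WEIGHTS = {
    "logins_per_day": 1.2,
    "wallet_points": 0.01,
    "avg_transaction_value": 0.01,
}

# Hypothetical "average customer" used as the reference point (assumption).
BASELINE = {
    "logins_per_day": 2.0,
    "wallet_points": 50.0,
    "avg_transaction_value": 40.0,
}


def score(features):
    """Return the toy retention score for a set of feature values."""
    return sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def local_contributions(features):
    """Return each feature's contribution relative to the baseline customer."""
    return {
        name: WEIGHTS[name] * (features[name] - BASELINE[name])
        for name in WEIGHTS
    }


# A made-up customer: frequent logins, empty wallet, modest transactions.
customer = {
    "logins_per_day": 3.0,
    "wallet_points": 0.0,
    "avg_transaction_value": 25.0,
}

contributions = local_contributions(customer)
for name, value in sorted(contributions.items(), key=lambda item: item[1]):
    print(f"{name:>24}: {value:+.2f}")
```

In this toy setup the contributions add up exactly to the difference between the customer's score and the baseline score, so positive values push toward retention and negative values push toward churn. Real models are rarely linear, and attribution methods for them are considerably more involved.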

Interested in discovering papAI?

Our sales team is available to answer any questions!