How RAG Systems Improve Public Sector Management The most important...

Read MoreHow RAG Systems Improve Public Sector Management

Table of Contents

ToggleThe most important tool that any industry needs to enhance operations and customer service is artificial intelligence. RAG (Retrieval-Augmented Generation) has quickly become a crucial tool for enhancing public administrations’ efficiency, transparency, and decision-making in this context of change. However, this industry is well known for its poor acceptance rate and difficulties embracing new technology.

This article will explain what RAG is and why it matters for government and public services.

RAG Systems in Public Administration: What's the overview?

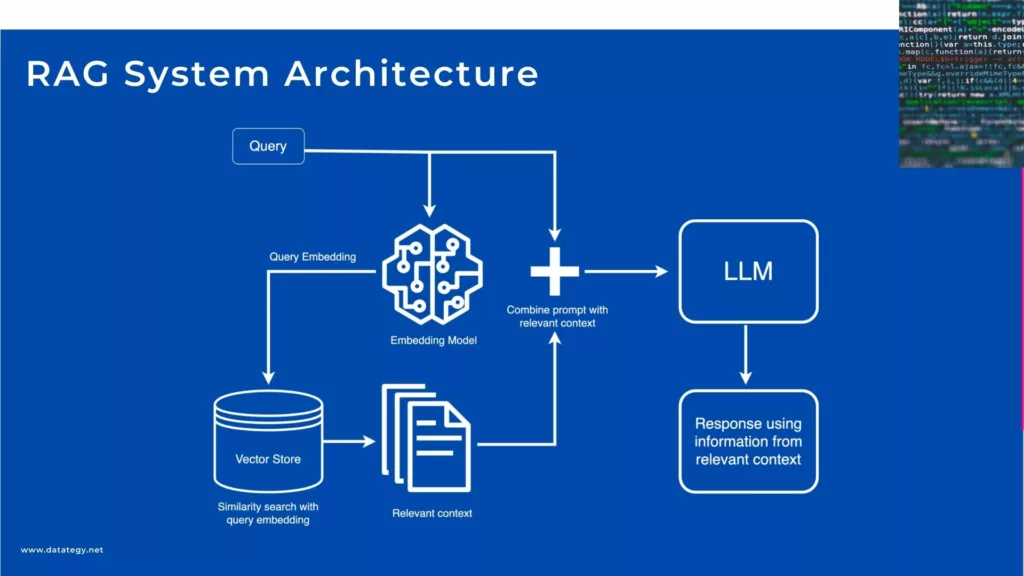

Large language models (LLMs) and real-time data retrieval are two aspects of AI design that are combined in RAG (Retrieval-Augmented Generation) systems.

Before producing a response, RAG models can explore other databases or document collections to retrieve pertinent, current information rather than on only on pre-trained knowledge. This method greatly improves output accuracy and relevance, particularly when choices call for up-to-date or domain-specific data.

The capacity of RAG systems to connect trustworthy, often regulated information sources with AI’s generating capability is what distinguishes them in the public sector. This promotes accountability and openness, two cornerstones of efficient government, in addition to increasing faith in technology.

RAG systems have the potential to be important instruments in enhancing the agility, knowledge, and citizen-centricity of public institutions as digital transformation initiatives proceed.

What Are a RAG System's Fundamental Elements?

Embedding Models

Embedding models, which transform raw text into dense vector representations that let computers to understand and manipulate word and phrase meaning, are a crucial component of RAG systems. Quick generation and retrieval of relevant information is made possible by these embeddings, which capture the semantic relationships between words. It is essential to select an embedding model that is suitable for the specific task at hand.

For instance, models like BERT or GPT are commonly used because of their ability to provide contextual embeddings of the highest calibre. Retrieval accuracy and answer relevance are improved by optimizing these models on task-specific data since they capture the nuances of the field.

Retrievers Systems

In a RAG system, the retriever is the first step of the RAG pipeline and is responsible for locating and obtaining relevant information from a vast amount of data. Sparse retrieval and dense retrieval are the two main methods that retrievers often use. Sparse retrieval, like BM25, is based on keyword matching and is ideal for large document searches where speed is a major consideration.

On the other hand, dense retrieval enhances its capacity to capture query context and complexity by matching learned embeddings with semantic meaning. The ability of the retriever to select relevant, high-quality data is critical to the overall performance of the system, since it directly impacts the quality of the response generated by the subsequent generation.

Generators

The generator in a RAG system is responsible for converting the relevant information that the retriever has acquired into text that is grammatically correct, appropriate for the context, and readable by humans. A powerful generator is necessary when dealing with complex queries or large amounts of data in order to ensure that the RAG system produces results that appear authentic.

Generators frequently use large language models, such as GPT or T5, to generate text based on the collected data. Certain output types, such as creating debates, summarising papers, or answering questions, can be better handled by modifying these models.

RAG System Architecture

What are the Key Benefits of RAG Systems in Public Administration?

Improved Accuracy in Data Retrieval

The capacity of Retrieval-Augmented Generation (RAG) systems to greatly improve data retrieval accuracy is one of their most notable advantages in public administration. Conventional AI models depend on their training data, which is frequently out-of-date or lacks the specificity needed for government operations.

RAG systems alter that by dynamically extracting data from databases that are updated or live before producing a response. Public employees may now obtain the most up-to-date, pertinent information rather of relying on antiquated manuals or static reports. To guarantee that the information given is correct and in line with current standards, a RAG system may, for example, get the most recent housing rule or financial aid guideline immediately from the pertinent database if a public employee requires it.

In public administration, where choices frequently impact huge populations and must comply with legal frameworks, this degree of accuracy is particularly crucial. Errors brought on by inaccurate information or out-of-date references may result in delays in policy, public unhappiness, or even legal problems. Agencies can reduce these risks and promote confidence in their internal and external communications by using RAG systems.

Personalization of Knowledge Bases

Working with specialized knowledge repositories is one of RAG systems’ strong points. Various divisions in public administration frequently rely on extremely specialized information that isn’t pertinent or available to the broader public, such as municipal ordinances, legal codes, internal memoranda, and urban planning rules. These internal data sources may be used to customize RAG models, guaranteeing that answers are not only correct but also suitable for the particular requirements of the agency.

This degree of personalization encourages relevance in each contact and helps get rid of generic outputs. The system may modify its outputs to conform to department-specific regulations and terminology, whether it is a policy analyst creating a report using internal datasets or a helpdesk chatbot assisting citizens with tax declarations. It turns into a dynamic, searchable library that develops and changes in tandem with the organization’s expertise.

Enhanced Efficiency in Information Processing

Government offices are known for handling enormous amounts of documents, reports, and paperwork. Much time is spent searching through data, whether it is for processing applications, answering questions, or creating policy papers.

This entire process is made more efficient by RAG systems. These systems enable staff members to locate and comprehend information far more quickly than they could with manual techniques or conventional search engines by combining the speed of language models with the accuracy of data retrieval.

Consider the scenario where a municipal administrator must present a briefing on trends in energy use. Rather to sifting through several spreadsheets and PDFs, the RAG model can quickly extract and condense the most important information.

Time is saved, cognitive strain is lessened, and public employees may concentrate on strategic activities that call for human judgment. Better service delivery, faster reaction times, and more flexible decision-making are all possible outcomes of this increased efficiency over time.

Low-Cost Information Administration

Budgets for the public sector are limited, and governments are always under pressure to accomplish more with less. RAG systems are a more affordable option than investing in extensive data infrastructure or recruiting more employees.

They minimize the possibility of human mistakes and eliminate the need for repeated manual labor by automating portions of the research, writing, and communication processes.

A well-structured RAG model may fulfil several functions, from document summaries and citizen help to legal analysis and compliance checks, rather of creating distinct systems for every department’s requirements.

Over time, this improves the caliber and accessibility of public services while saving governments money on software, training, and operating expenses. For contemporary governments hoping to innovate without going over budget, it’s a clever, scalable answer.

What are the Key Applications of RAG in Financial Services?

1- Enhanced Citizen Services and Information Access

Chatbots and Virtual Assistants Driven by AI

Consider how important it is to comprehend the most recent laws in your area before launching a small business. A RAG-powered chatbot can give you accurate answers by referencing particular pages and parts of the pertinent law, saving you hours of hunting through government websites.

In a casual, conversational style, it can help you understand your tax responsibilities, navigate application procedures, and provide answers to frequently asked questions regarding your eligibility for social programs. The “augmentation” component is crucial in this case since the chatbot actively retrieves the most pertinent material from official government databases and papers in real-time to create its replies rather than merely depending on its previously learned information, which may be out-of-date or lacking.

In the end, this increases citizen happiness and trust in public services by producing help that is more accurate, dependable, and contextually relevant. It increases the system’s overall efficiency by freeing up human agents to address more delicate or complicated cases.

Personalized Information Delivery

Every citizen has a different situation. A older adult asking about pension plans will require different information than a young family filing for daycare subsidies. By knowing the context, previous encounters, and unique circumstances of each user, RAG may potentially personalize the way information is delivered.

Imagine accessing a government portal and seeing the most pertinent paperwork, changes, and deadlines based on your past inquiries and profile. In order to give a customized experience, RAG may accomplish this by deftly combining data from many government databases, all the while abiding by stringent privacy laws. This proactive, individualized strategy can lessen the sense of being lost in a convoluted bureaucratic system and greatly increase citizen involvement.

2- Streamlining Internal Operations and Knowledge Management:

Efficient Information Retrieval for Employees

Consider a situation when a policy analyst has to know the background information on a certain rule. An analyst may obtain a thorough understanding in minutes by using a RAG-powered internal search engine to swiftly retrieve all pertinent memoranda, reports, and previous interpretations, saving hours of time spent searching through old records and disjointed databases.

In a similar vein, a caseworker handling a complicated case may quickly consult all pertinent policies, court rulings, and client records, enabling them to make quicker and better choices. This improved information availability empowers workers, cuts down on research time, minimizes mistakes, and eventually boosts government operations’ overall effectiveness.

Enhanced Document Analysis

Contracts, policy papers, legal texts, and citizen correspondence are just a few of the vast amounts of paperwork that government institutions handle. It is crucial but frequently time-consuming to analyze these papers in order to extract important information, find pertinent phrases, and summarise the material. RAG can greatly improve this procedure.

To find common provisions, possible hazards, or places for standardization, for instance, a huge collection of contracts may be analyzed using a RAG system. For busy authorities, it helps emphasize the most important points from long policy texts. Alternatively, it can examine citizen input to find themes and areas that need development. This capacity to effectively analyze and comprehend vast amounts of material offers insightful information for policy implementation and decision-making.

3- Improved Decision-Making and Policy Formulation:

Policy Recommendations

To fully grasp RAG’s revolutionary potential in creating successful policies, take into account the complex balancing act between learning from the past and comprehending the present. Policymakers frequently face a mountain of data, ranging from lengthy scholarly articles with intricate statistical analysis to the constantly fluctuating tides of public opinion reflected on various social media platforms.

At the same time, students have to comprehend the intricacies and effects of the current legal system. As a clever research helper, RAG can quickly and accurately navigate through this enormous ocean of data. Think of a policy analyst who is charged with creating plans to counteract climate change.

With RAG, they could quickly obtain the most pertinent scientific research on reducing emissions, examine social media opinion towards different green projects, and compare this data with current environmental laws and their track record of success.

This combined knowledge, which is supported by data and placed in the context of the current legal system, enables decision-makers to go beyond intuition or anecdotal evidence to create well-informed policies that are also more likely to be well-received by the general public and produce the desired results.

Real-time Insights for Strategic Decisions

Think about how modern government is dynamic, requiring quick and well-considered reactions to unanticipated events and quickly changing social requirements. RAG gives government officials a comprehensive, up-to-date picture of the changing environment, enabling them to go beyond reactive responses.

In the event of an unexpected economic slowdown, a system driven by RAG could instantly compile and examine current economic data, social media opinions about financial difficulties, and news articles describing the crisis’s effects on different industries. At the same time, it could retrieve and compile information about how successful previous economic stimulus plans were.

Leaders are able to see the problem more clearly and create focused, evidence-based solutions far more quickly than they could with previous techniques because to this near real-time integration of disparate data. Strategic decision-making is transformed from a potentially fragmented and delayed process into a proactive and agile response mechanism by the capacity to foresee possible escalations, comprehend public reactions in real-time, and quickly learn from past examples.

What is the Challenge in implementing RAG in Public Organizations?

Though it has great potential to improve efficiency and citizen services, implementing Retrieval-Augmented Generation (RAG) in public organisations comes with a special set of challenges that should be carefully considered:

The Importance of Fine-Tuning in RAG

It’s important to realize that a Large Language Model (LLM) is essentially a complex language layer. Its main purpose is to convert information into comprehensible, human-readable prose, whether that information is a direct query or context that has been obtained in a RAG system. Imagine it as an articulate speaker who can respond based on the information provided.

However, particularly in complex fields like public services, this innate language capacity does not always convert into precise, pertinent, or contextually acceptable responses for particular applications. This is the point at which the LLM, whether it is functioning independently or in conjunction with a contextual layer such as RAG, has to be adjusted.

An LLM still has to be “taught” how to use the context efficiently, even if it is a part of a RAG system, where the retrieval mechanism is made to offer the most pertinent contextual information. It must be able to recognize the most important details in the documents that are retrieved, properly synthesize them, and structure the response according to the audience and use case. Without fine-tuning, the LLM may have trouble prioritizing information, misunderstand subtleties in the text that was collected, or produce replies that are overly general or devoid of the necessary specifics.

Five Fine-Tuning Strategies for RAG Components

Fine-tuning Embedding Models with Domain Specificity in Mind

Optimizing a RAG system for certain activities or sectors requires fine-tuning embedding models for domain specialization. The purpose of embedding models is to transform textual input into dense vector representations that capture word associations and semantic meanings. Pre-trained models, such as BERT or GPT, are less useful for specialized applications that ask for domain-specific expertise since they are usually trained on huge, generic datasets.

By exposing these models to a smaller, domain-specific corpus, fine-tuning helps close this gap by enabling them to more accurately represent the vocabulary, subtleties, and context of the subject. Optimizing embedding models guarantees that the system can comprehend and interpret domain-specific language, whether in the fields of healthcare, finance, or law. This enhances the precision of information retrieval and the caliber of the generated outputs

Supervised learning is used in the fine-tuning step when a labeled dataset pertinent to the particular domain is used to train the model. These might be legal documents for a task involving the law or medical research articles for a healthcare application. The model’s parameters are changed during fine-tuning in order to better reflect the context and vocabulary semantics of the domain.

Direct Preference Optimization (DPO)

To enhance a model’s performance on a particular job, Direct Preference Optimisation (DPO) is a fine-tuning technique that focusses on learning a preference function. The sharpness of the preference learning aim is adjusted by the preference temperature parameter introduced by DPO, in contrast to conventional fine-tuning methods that only use loss minimization over labeled datasets.

The degree to which the model favors some outputs over others can be adjusted by researchers using this parameter. When it comes to text creation or ranking systems, for example, DPO can adjust the model to better match the intended results by learning to favor particular kinds of replies. This makes it especially helpful for jobs like recommendation systems and conversational AI that call for subjective or nuanced judgments.

By comparing outputs, DPO iteratively enhances the model’s preference for task-aligned outcomes. In recommendation systems, for example, the model may be tuned to give precedence to outcomes that are both contextually relevant and correct. This method guarantees that the behaviour of the model is closely matched with certain goals, improving its applicability and task performance as a whole. Developers may better control the model’s outputs and make more focused and precise optimizations by utilizing DPO.

Low-Rank Adaptation (LoRA)

A fine-tuning technique called Low-Rank Adaptation (LoRA) makes it possible to alter big pre-trained models in an effective and scalable manner. LoRA adds tiny, trainable low-rank matrices to the network in place of changing the whole set of model parameters. Only a portion of the parameters are changed by these matrices, enabling task-specific customization while maintaining the majority of the model’s initial weights.

LoRA is a great option for modifying large models like GPT or BERT to fit specialized domains because of its selective parameter adjustment, which lowers computational overhead and lowers the chance of overfitting. For example, LoRA preserves the broad knowledge of the original model while concentrating primarily on domain-relevant modifications when fine-tuning a language model to specialize in legal or scientific material.

LoRA allows fine-tuning even in contexts with limited resources without compromising accuracy or performance since it is lightweight, resource-efficient, and effective.

Improved Vector Search in RAG Systems

A key component of the retrieval system in a RAG (Retrieval-Augmented Generation) architecture is vector search, also known as semantic search. This part is in charge of finding and collecting pertinent facts from big external knowledge bases, giving the language model the context it needs to produce outputs that are precise, logical, and contextually correct. Vector embeddings, which are high-dimensional numerical representations of words, phrases, or documents that capture their semantic meaning rather than simply their surface-level keywords, are used by the retrieval system to do this.

Using an embedding model, such BERT or sentence transformers, the system first converts a query into a vector representation in vector search. The context, subtleties, and intended meaning of the question are all captured in this vector. A database of pre-computed vectors from texts, articles, or knowledge bases is then compared with this query vector by the retrieval system.

The algorithm ranks and returns the most pertinent texts or passages that closely match the semantic meaning of the query by utilizing similarity metrics like dot product or cosine similarity. This makes it possible for the retrieval system to take into consideration the deeper meanings and relationships between phrases in addition to keyword matching.

Enhancing Generators for Contextual Relevance

Fine-tuning a generator on task-specific data is essential to improving it for contextual relevance. It is necessary to expose the model, which is frequently built on sizable pre-trained architectures like GPT or T5, to a range of example scenarios pertinent to the field in which it will be employed. For example, a dataset of previous client questions and answers might be used to improve the generator in a customer support program.

This enables the model to learn how to respond to various client problems, decipher the subtleties of inquiries, and produce useful and contextually relevant answers. The model gains a better understanding of domain-specific scenarios, specialized vocabulary, and the subtleties of tone or formality required in the outputs through fine-tuning.

Adding features that enable the generator to concentrate on the most pertinent data that the retrieval system has collected is another crucial tactic for improving it. The generator should be taught to recognize and highlight the most important elements of the retrieved information, rather than merely producing text based on a general context.

In order to keep the answer on topic and prevent it from veering into irrelevant or peripheral material, this may include making use of attention mechanisms, which enable the generator to “attend” to particular portions of the input more forcefully. Furthermore, the generator’s replies may be guided to adhere to predetermined patterns by using structured prompts or templates, which enhances coherence and relevancy.

Why is LAFT (Layer Augmentation Fine-Tuning) the most effective approach?

Layer Augmentation Fine-Tuning (LAFT) is a really smart way to make Large Language Models (LLMs) better for specific tasks without messing with how they fundamentally work. It’s like adding new, trainable sections to both the part that understands the input (encoder) and the part that generates the output (decoder), while keeping the main “brain” of the model untouched.

This lets the model learn about specialized areas like finance, law, or tech without losing its general smarts. Unlike regular fine-tuning that changes everything and can make the model forget old stuff or become too focused on one thing, LAFT keeps the original knowledge safe while allowing it to learn new tricks in a controlled way. Plus, LAFT is easier on computers and the training process is more stable because it only trains the new sections.

This is super helpful for finance companies that need to quickly adapt to new rules and market changes. Also, keeping the main model frozen means updates are more reliable and less likely to cause unexpected errors in important financial applications.

“With LAFT, we bridge the gap between adaptability and stability, enhancing domain-specific performance without compromising the foundational intelligence of large language models.”

Mohamed Elhawary

R&D Data Scientist

A to Z of Generative AI: An in-Depth Glossary

This guide will cover the essential terminology that every beginner needs to know. Whether you are a student, a business owner, or simply someone who is interested in AI, this guide will provide you with a solid foundation in AI terminology to help you better understand this exciting field.

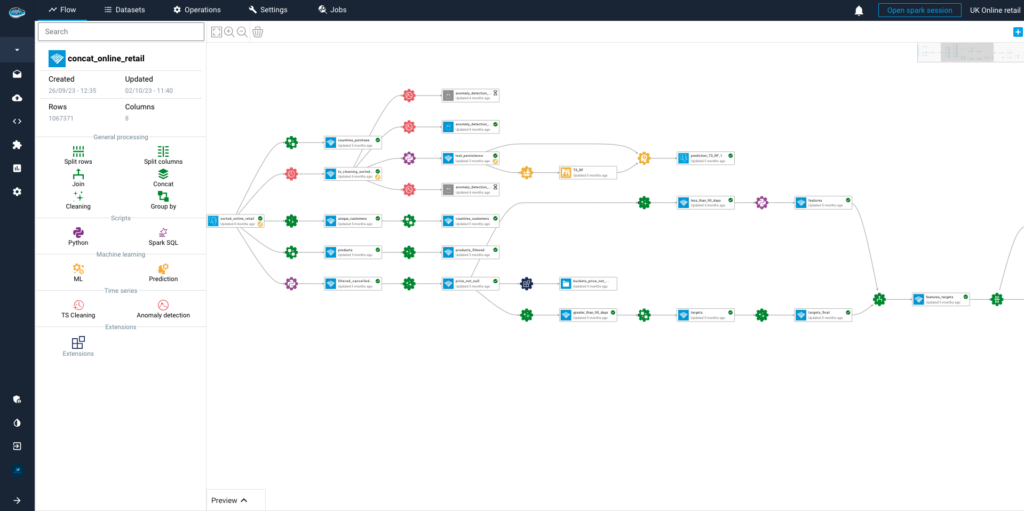

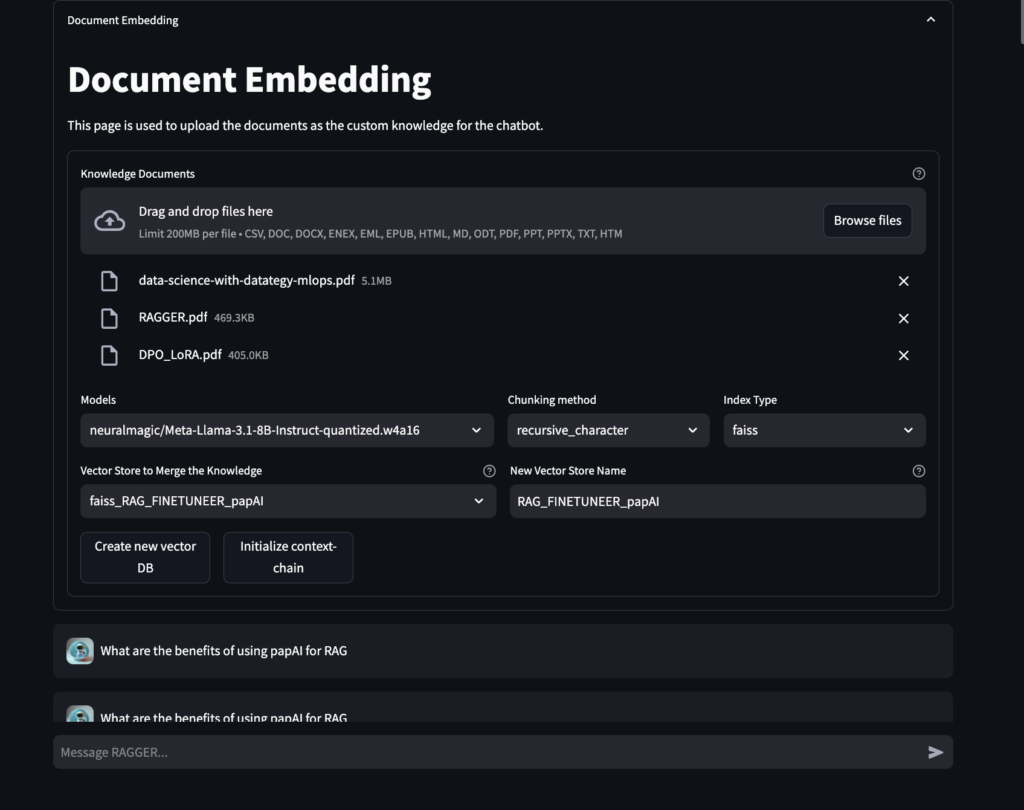

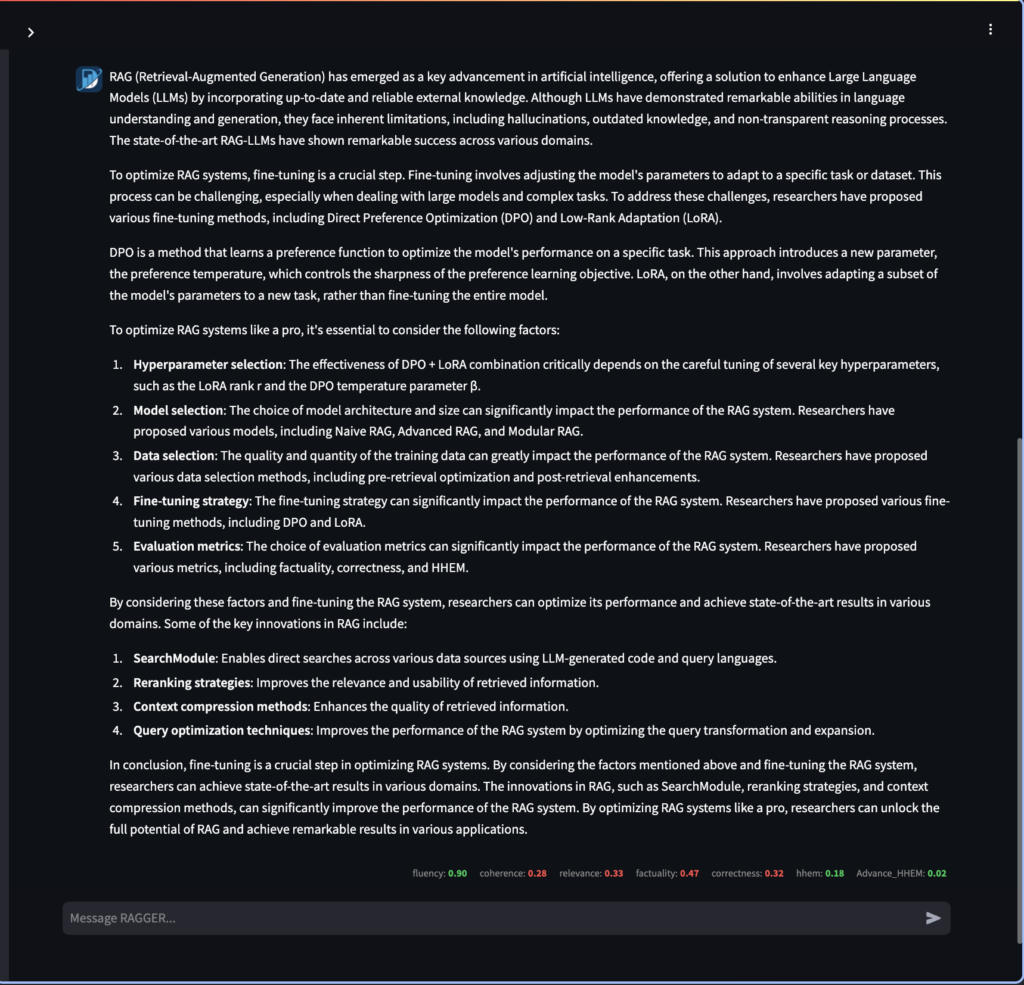

How papAI Helps you to Build Rag Systems ?

papAI is an all-in-one artificial intelligence solution made to optimize and simplify processes in various sectors. It offers strong data analysis, predictive insights, and process automation capabilities.

Here’s an in-depth look at the key features and advantages of this innovative solution:

Optimizing Document Retrieval

papAI-RAG combines papAI’s sophisticated capabilities for preprocessing with an improved asynchronous vector database search, enabling a powerful dual-approach system for seamless retrieval. papAI’s modular workflow would handle chunking and indexing while maintaining clear data lineage with its hyper-layered approach that enables multi-granularity search across different data levels.

The key benefits include significantly faster retrieval times (64.5% latency improvement), better data quality through papAI’s preprocessing, and more comprehensive search results through the combined retrieval methods.

Response Generation

the combines papAI-RAG leverages papAI’s data validation and quality control features alongside advanced weighted reciprocal rank fusion and reranking methods for context selection and response generation. Benefits include reduced hallucination rates, improved response relevance, better explainability through visualizations, and a 66.6% reduction in energy consumption compared to traditional RAG approaches.

Create your Own Rag to Leverage your Data using papAI solution

In conclusion, while RAG offers a transformative potential for public services, its successful implementation requires careful navigation of a complex landscape of technical, data-related, ethical, organizational, and financial challenges.

Discover how a RAG system, tailored to your organization, can drive the next phase of your operations Schedule a demo to learn more.

RAG (Retrieval-Augmented Generation) is an AI design that combines large language models (LLMs) with real-time data retrieval. Unlike traditional AI models that rely solely on their pre-trained knowledge, RAG models can explore external databases or document collections to retrieve relevant and current information before generating a response.

- Embedding Models: These transform raw text into dense vector representations, enabling computers to understand the semantic meaning of words and phrases for efficient information retrieval.

- Retrievers Systems: These are responsible for locating and obtaining relevant information from vast amounts of data using methods like sparse retrieval (keyword matching) and dense retrieval (semantic meaning matching).

- Generators: These convert the retrieved relevant information into grammatically correct, contextually appropriate, and human-readable text, often utilizing large language models.

Fine-tuning optimizes RAG systems by enhancing retrieval accuracy, domain-specific precision, and output coherence. For instance, fine-tuning retrievers ensures that the system selects highly relevant information from external sources, while fine-tuning generators improve the clarity and relevance of the responses. This process helps adapt RAG systems to specific tasks and industries like healthcare, legal, or finance.

Layer Augmentation Fine-Tuning (LAFT) is an effective approach to enhance Large Language Models (LLMs) by adding trainable layers to both the encoder and decoder while keeping the base model’s core architecture and parameters frozen.

Interested in discovering papAI?

Our AI expert team is at your disposal for any questions

Scaling RAG Systems in Financial Organizations

Scaling RAG Systems in Financial Organizations Artificial intelligence has emerged...

Read MoreHow AgenticAI is Transforming Sales and Marketing Strategies

How AgenticAI is Transforming Sales and Marketing Strategies Agentic AI...

Read More“DATATEGY EARLY CAREERS PROGRAM” With Abdelmoumen ATMANI

“DATATEGY EARLY CAREERS PROGRAM” With Abdelmoumen ATMANI Hello, my name...

Read More