Top MLOps Challenges for Startups & Enterprises in 2025

In today’s fast-changing technology landscape, a few disciplines determine whether AI succeeds in practice. One of them is Machine Learning Operations, or MLOps. MLOps simplifies and automates the machine learning process, and it is crucial for organizations that want to move AI models from the lab into real-world applications.

It’s not simply about deploying a model once; it’s about establishing a robust and repeatable process for continuous integration, continuous delivery, and continuous training, ensuring models remain accurate, relevant, and performant over time.

MLOps provides the framework for managing the complexities of data pipelines, model versioning, performance monitoring, and retraining, enabling teams to iterate quickly and efficiently. Without MLOps, AI initiatives often struggle to scale, facing challenges like model drift, integration bottlenecks, and a lack of clear governance, ultimately hindering their ability to deliver meaningful business value.

The global MLOps (Machine Learning Operations) market was valued at USD 1.7 billion in 2024, reflecting significant demand for efficient deployment and management of machine learning models across industries (source: GM Insights).

This article examines the approaches taken by startups and large enterprises to address the challenges of MLOps.

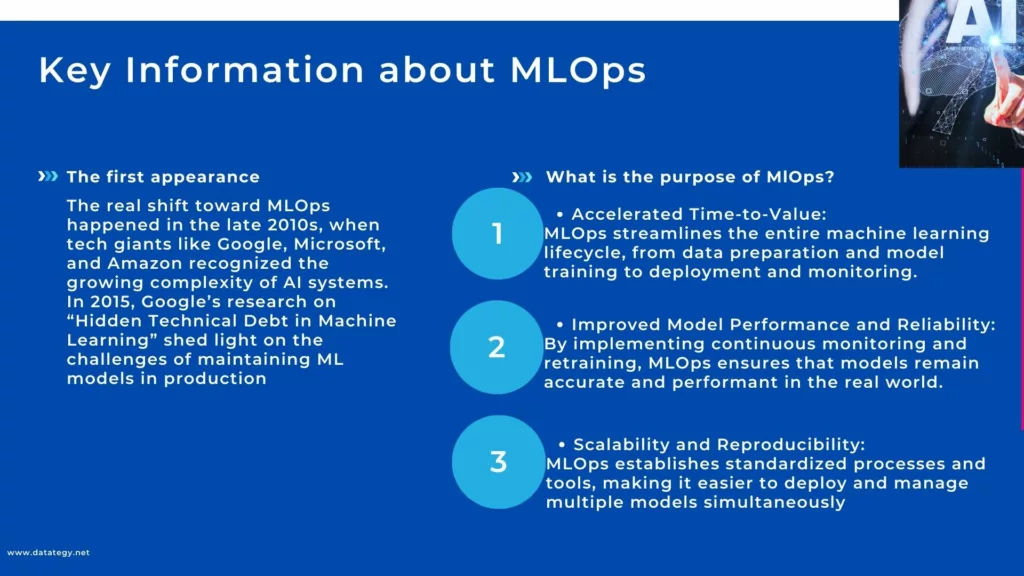

Where Did MLOps Come From? A Look at Its Origins

The increasing difficulty of implementing and maintaining machine learning models in operational settings led to the development of MLOps, or Machine Learning Operations. At first, machine learning was mostly limited to research and experimental environments, where data scientists created and tested models by hand without following established deployment procedures.

But when businesses started incorporating AI into practical uses, it became clear that handling the full ML lifecycle, from data preparation to model training, deployment, and monitoring, required an organized approach. As a result, DevOps-inspired approaches were adopted early on, adapting established software engineering practices to the particular difficulties of machine learning systems.

Companies like Google, Microsoft, and AWS, which understood the operational challenges of growing AI systems, drove the early adoption of the MLOps concept in the late 2010s. Google in particular shaped MLOps with its 2015 paper “Hidden Technical Debt in Machine Learning Systems,” which emphasized the difficulty of sustaining ML models after they are first deployed.

As machine learning pipelines grew more intricate, organizations faced challenges including model drift, reproducibility, and cooperation between data scientists and engineers. MLOps frameworks and tools like TensorFlow Extended (TFX), MLflow, and Kubeflow were developed to overcome these obstacles and provide automation, monitoring, and version control for ML models.

What does MLOps mean?

According to Wikipedia, MLOps (machine learning operations) is a discipline that enables data scientists and IT professionals to collaborate and communicate while automating machine learning algorithms.

Our definition at Datategy is this: MLOps, or Machine Learning Operations, is a collection of practices that bridges the gap between data science and IT operations to guarantee the effective deployment, monitoring, and administration of machine learning models in production. Just as DevOps revolutionized software development through continuous integration and automation, MLOps extends these concepts to the machine learning lifecycle.

It ensures that AI systems stay dependable and flexible as circumstances change by covering everything from data preparation and model training to deployment, scalability, and performance monitoring. Without MLOps, organizations frequently suffer from disjointed workflows, ineffective teamwork, and models that deteriorate over time for lack of supervision.

Key Information about MLOps

What are the Benefits of using MLOps in the organization?

Better Collaboration Between Teams

For AI to be deployed successfully, data scientists, engineers, and IT operations must work together seamlessly. These teams, however, frequently operate in silos, which causes misunderstandings, inefficiencies, and longer project completion times. Data scientists may create strong models, but those models often never reach production if engineering teams don’t properly integrate them.

By introducing common tools, standardized procedures, and transparent communication workflows, MLOps serves as a link between these teams. Workflow automation lessens reliance on manual handovers, while version control systems like Git let multiple stakeholders track changes. The results are faster experimentation, fewer mistakes, and a smoother transition from research to production.

Scalability & Efficiency

Regardless of size or location, businesses can deploy, operate, and update models more easily thanks to the scalable infrastructure that MLOps provides. Cloud-based solutions and containerization technologies like Docker and Kubernetes make it simpler to scale resources dynamically in response to demand. A multinational bank that uses AI for fraud detection, for instance, can deploy models in several regions while keeping them consistent and up to date.

Beyond technical scalability, MLOps also optimizes resource use. Rather than squandering computing capacity on obsolete or redundant models, businesses can allocate resources effectively, lowering operating expenses while getting more value from AI-driven insights.

Improved Model Performance & Reliability

By implementing automatic alerts and continuous monitoring, MLOps removes uncertainty from operating AI models and gives organizations a real-time view of their performance. Think of MLOps as your AI’s equivalent of a doctor continuously checking a patient’s vital signs. Deploying a model and hoping for the best is not enough. Over time, a model’s accuracy may be affected by changes in user behavior, market conditions, and data. MLOps keeps watch on these models by tracking important metrics such as accuracy, precision, and recall. Just as a doctor intervenes when a patient’s vital signs decline, MLOps doesn’t stand by and let a model perform poorly.

Beyond just reacting to current issues, MLOps also provides valuable insights into past performance through comprehensive logging and auditing. Think of it as a detailed medical history. By meticulously logging model behavior, including inputs, outputs, and performance metrics, MLOps creates a traceable record.
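To make the “vital signs” idea concrete, here is a minimal sketch of metric tracking with threshold alerts. It assumes binary labels; the function names, the sample batch, and the threshold values are illustrative assumptions, not part of any specific MLOps product.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    accuracy: float
    precision: float
    recall: float


def compute_metrics(y_true, y_pred):
    """Compute accuracy, precision, and recall for binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    total = tp + tn + fp + fn
    return Metrics(
        accuracy=(tp + tn) / total if total else 0.0,
        precision=tp / (tp + fp) if (tp + fp) else 0.0,
        recall=tp / (tp + fn) if (tp + fn) else 0.0,
    )


def check_alerts(metrics, thresholds):
    """Return the names of metrics that fell below their minimum threshold."""
    return [name for name, minimum in thresholds.items()
            if getattr(metrics, name) < minimum]


# Hypothetical batch of labelled production predictions.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 0, 0, 1, 0]

metrics = compute_metrics(y_true, y_pred)
# Threshold values are assumptions chosen for illustration only.
alerts = check_alerts(metrics, {"accuracy": 0.8, "precision": 0.7, "recall": 0.7})
print(metrics)
print("Metrics below threshold:", alerts)
```

In a real pipeline the same check would run on a schedule against freshly labelled data, and the alert list would feed a notification or retraining step rather than a print statement.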

What are the MLOps challenges for small businesses/startups?

Hiring Talent

Tech giants, research institutes, and large corporations compete aggressively for top AI talent in the fiercely competitive field of machine learning. With smaller hiring budgets and fewer prospects for advancement to offer, small organizations frequently find it difficult to recruit qualified data scientists, MLOps engineers, and DevOps specialists.

Professionals with experience in cloud computing, CI/CD pipelines, Kubernetes, and ML model monitoring are especially sought after, as the need for MLOps talent has increased dramatically in recent years. Unfortunately, small enterprises often cannot match the high pay, perks, and career progression that larger companies provide. As a result, they either have trouble hiring top talent or suffer high turnover as employees leave for higher-paying positions.

Furthermore, employees at small enterprises may find it hard to keep up with the latest developments in MLOps, because the formal training programs and mentorship that bigger organizations offer are missing. Even when a small organization succeeds in hiring promising AI talent, the lack of senior mentorship and collaborative AI teams can impede growth and lead to inefficiencies in deployment and management.

To address this issue, small companies can:

- Create a pipeline for future AI talent by collaborating with colleges to offer internships or research opportunities.

- Invest in training current staff members through online courses or certifications in MLOps technologies and best practices.

- Use automation to lessen your reliance on specialized MLOps experts by adopting low-code MLOps solutions, cloud-managed ML pipelines, and AutoML.

- To attract and keep elite AI talent, offer equity, remote work options, and opportunities for professional advancement.

Limitations on Resources

Cloud storage, high-performance computers, automation tools, and security precautions are some of the costly components that make up MLOps. Open-source solutions like MLflow, Kubeflow, or TensorFlow are used by many small enterprises to reduce expenses, but they need to be set up and maintained by qualified engineers, which smaller teams frequently lack.

Furthermore, as data volume and model complexity increase, cloud computing services can become prohibitively expensive, making it challenging for small businesses to maintain long-term AI initiatives.

Time constraints are just as important as financial ones. Employees in small organizations usually work in lean teams and take on several responsibilities. A single data scientist may be in charge of both building and deploying models, which can result in inefficiencies and burnout.

To address resource concerns, small businesses should:

- To maximize infrastructure budget, give priority to affordable and local cloud options.

- Reduce the cost of software licensing by utilizing open-source MLOps solutions.

- Reduce the amount of human labor and increase productivity by implementing automation.

- To get specialized talents without hiring full-time staff, outsource or work with outside specialists.

Problems with Scalability

Another major obstacle to small firms implementing MLOps is scalability. Unlike large corporations with mature AI infrastructure, small firms frequently begin with small-scale AI initiatives and then find it difficult to scale up when demand rises. Inadequate automation, infrastructure, and data pipelines can significantly impede development and keep companies from reaping the full benefits of artificial intelligence.

Managing growing volumes of data is a major obstacle to scaling MLOps. As a company expands, it gathers more data, which calls for more computing power, better data management systems, and more efficient model retraining procedures. Without adequate data pipelines and storage solutions, small firms may encounter performance bottlenecks, data silos, and lengthy model training periods that make their AI implementation ineffective.

In the early phases of their AI journey, many small organizations also rely on manual operations. This can work for one or two models, but when several models require frequent maintenance, retraining, and updating, manual intervention becomes unsustainable. Scaling MLOps is very difficult in practice without automated CI/CD pipelines, version control, and real-time monitoring.

To address scalability concerns, small businesses should:

- Use cloud-based MLOps systems for scalable, pay-as-you-go options.

- To effectively manage ML models, use containerization solutions such as Docker and Kubernetes.

- Reduce human labor and improve performance by automating data pipelines and model monitoring.

- To grow gradually without straining resources, start with tiny, modular AI solutions.
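As one way to automate model monitoring without manual checks, the sketch below computes the Population Stability Index (PSI) between a training sample and a live sample of one numeric feature, and uses it as a retraining trigger. PSI is a technique swapped in here for illustration; the function names, the bin count, and the 0.2 threshold (a common rule of thumb) are assumptions rather than anything the approaches above prescribe.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between two samples of one numeric feature."""
    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 when every expected value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the training range are clipped into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(training_sample, live_sample, threshold=0.2):
    """Flag retraining when drift exceeds the (assumed) PSI threshold."""
    return psi(training_sample, live_sample) > threshold


# Hypothetical feature values: live data has shifted upward by 50.
training = [float(v) for v in range(100)]
live = [v + 50.0 for v in training]

print("PSI:", round(psi(training, live), 3))
print("Retrain:", should_retrain(training, live))
```

A scheduled job could run this check per feature and open a retraining task only when drift is detected, which keeps human effort proportional to actual change in the data.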

Integrating with Existing Systems

Startups may find it difficult to integrate MLOps with their present systems, particularly if their current infrastructure was not designed with AI or machine learning in mind. Retrofitting MLOps into tools, software, or workflows that a firm has already invested in can result in incompatibilities or inefficient, laborious setups. This slows the adoption of AI and MLOps practices and can limit the business’s ability to deploy models quickly and scale efficiently. There are strategies to get around these obstacles, though.

You can:

- Adopt adaptable, modular MLOps solutions like papAI7 that don’t require significant redesigns and can interface with a range of current tools and systems. This makes it possible for startups to use AI/ML procedures without having to totally give up on their old systems.

- To integrate MLOps technologies with current systems and facilitate data transfer between AI models and legacy applications, use middleware or API-based integration. As a result, there is less disturbance and more seamless integration with less downtime.

- Phase in AI/ML procedures gradually by beginning with less invasive integrations that permit incremental enhancements and little victories. Businesses may adapt to the integration in this way without overburdening their personnel or systems.
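The API-based integration idea can be sketched as a thin adapter that translates a legacy record into the features a model expects, so that neither side has to change. The field names, the mapping, and the stand-in model below are all hypothetical and exist only for illustration.

```python
import json

# Hypothetical mapping from legacy field names to the feature names a model expects.
FIELD_MAP = {
    "CUST_AGE": "age",
    "ACCT_BAL": "balance",
    "TXN_CNT_30D": "transactions_30d",
}


def legacy_to_features(record):
    """Translate a legacy record (string values) into typed model features."""
    missing = [key for key in FIELD_MAP if key not in record]
    if missing:
        raise ValueError(f"legacy record is missing fields: {missing}")
    return {new: float(record[old]) for old, new in FIELD_MAP.items()}


def handle_request(body, predict):
    """Middleware entry point: JSON in, JSON out, model passed in as a callable."""
    features = legacy_to_features(json.loads(body))
    return json.dumps({"score": predict(features)})


def toy_model(features):
    # Stand-in for a real model call; not a real scoring rule.
    return 1.0 if features["transactions_30d"] > 20 else 0.0


request_body = '{"CUST_AGE": "41", "ACCT_BAL": "1200.50", "TXN_CNT_30D": "35"}'
response = handle_request(request_body, toy_model)
print(response)
```

Because the model is passed in as a callable, the same adapter can sit in front of a local model, a remote endpoint, or a newer model version without touching the legacy application.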

What are the MLOps challenges for Large businesses/Enterprises?

Complex Data Management

Managing enormous volumes of data is one of the biggest obstacles to MLOps adoption in large businesses. Large organizations gather huge datasets from a variety of sources, such as social media, sensors, transactions, and customer interactions. This data is frequently dispersed across several systems, unstructured, and heterogeneous, which makes cleaning, storing, and preparing it for machine learning applications more difficult.

In addition to volume, large businesses deal with a variety of data types, including structured, semi-structured, and unstructured data in the form of text, photos, logs, or videos.

The complexity of data storage systems is another issue that large businesses face. When some data is kept on-site and the rest in cloud or hybrid environments, it can be difficult to guarantee accessibility, security, and consistency throughout the company. A poorly managed data ecosystem creates data silos, which make it hard to use all of the available data for insights and model training.

To overcome these obstacles, big businesses can:

- Put in place unified data systems that combine information from several sources into one easily accessible area.

- Use cutting-edge preprocessing and data cleaning techniques to guarantee that your data is clean and prepared for machine learning applications.

- To guarantee uniformity, security, and accessibility across all data sources, create robust data governance structures.

- To store enormous volumes of unstructured data in a scalable and easily accessible manner, use cloud-based data lakes.
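A simple starting point for data cleaning and governance is a schema check that separates usable rows from rejected ones and records why each row was rejected. The sketch below uses only plain Python; the schema format, column names, and sample rows are assumptions made for illustration.

```python
def validate_rows(rows, schema):
    """Split rows into clean and rejected according to a simple schema.

    schema maps column name -> (expected_type, required).
    """
    clean, rejected = [], []
    for row in rows:
        problems = []
        for column, (expected_type, required) in schema.items():
            value = row.get(column)
            if value is None:
                if required:
                    problems.append(f"{column}: missing")
            elif not isinstance(value, expected_type):
                problems.append(f"{column}: expected {expected_type.__name__}")
        if problems:
            # Keep the reasons so rejected data can be audited and repaired.
            rejected.append((row, problems))
        else:
            clean.append(row)
    return clean, rejected


# Hypothetical schema and records from two source systems.
schema = {
    "customer_id": (str, True),
    "amount": (float, True),
    "channel": (str, False),
}
rows = [
    {"customer_id": "c1", "amount": 12.5, "channel": "web"},
    {"customer_id": "c2", "amount": "12.5"},
    {"amount": 3.0},
]

clean, rejected = validate_rows(rows, schema)
print(len(clean), "clean rows")
for row, problems in rejected:
    print("rejected:", row, problems)
```

Applying one shared schema at the point where sources are merged is what keeps a unified data platform consistent, whichever storage technology sits underneath.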

Silos inside Organisations

Organisational silos present a significant obstacle for big businesses using MLOps. Teams in big organisations are frequently separated by department, function, or even geography, which can lead to inefficient workflows, misaligned goals, and communication hurdles. Data scientists, DevOps teams, and business divisions, for instance, may be unable to work together efficiently on machine learning projects because of differing agendas, work cultures, and platforms.

These silos can result in fragmented AI initiatives throughout the company. A data science team may develop a strong machine learning model, yet the model may never be successfully deployed to production if the team is not properly coordinated with the operations or IT departments. Furthermore, when teams are not in sync, it is harder to execute a unified MLOps strategy covering model creation, deployment, monitoring, and maintenance across the company.

In order to address organisational silos, big businesses can:

- Establish specialised MLOps teams that include data scientists, engineers, and business executives to promote cross-functional cooperation.

- To facilitate open communication and information exchange across teams, use collaboration tools like Slack, JIRA, or Confluence.

- Establish a centralised structure for AI governance to bring all teams together around shared objectives and guarantee that AI projects adhere to standard procedures.

- Adopt agile approaches that promote iterative work and continuous team input to keep projects in line with corporate goals.

Compliance with Regulations

Ensuring regulatory compliance while using machine learning models is a major concern for large organisations, particularly those in regulated sectors like healthcare, banking, and telecommunications. Many businesses are subject to strict rules on data security, privacy, and transparency; examples include HIPAA in the US and GDPR in Europe. Because these rules limit how data may be collected, stored, processed, and used, they can restrict the freedom with which businesses employ AI and machine learning.

It can also be difficult to guarantee ongoing compliance as models change over time. Models drift, regulations change, and new data can necessitate fresh compliance evaluations. Without an appropriate compliance framework in place, large businesses can find it difficult to keep their machine learning models compliant over time.

Large businesses can take the following actions to resolve regulatory compliance issues:

- Use compliance monitoring tools to track data usage and model behaviour and to verify that applicable regulations are being followed.

- Use explainable AI (XAI) techniques to make sure that models are comprehensible and that stakeholders and regulators can understand the reasoning behind choices.

- To make sure models continue to adhere to changing requirements, audit and update them on a regular basis.

- Work together with the legal and compliance departments to make sure AI initiatives meet the most recent legal specifications.
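Regular audits are easier when every prediction leaves a record that cannot be silently altered. The sketch below builds hash-chained audit entries that store a hash of the input rather than the raw data. The field names and model version string are hypothetical, and this is one possible design under those assumptions, not a compliance-certified implementation.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(payload):
    """SHA-256 of a JSON-serialisable object with stable key order."""
    return hashlib.sha256(json.dumps(payload, sort_keys=True).encode("utf-8")).hexdigest()


def audit_record(model_version, features, prediction, previous_hash=""):
    """Build a tamper-evident audit entry for one prediction."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        # Store a hash of the input instead of the raw, possibly sensitive data.
        "input_hash": _digest(features),
        "prediction": prediction,
        "previous_hash": previous_hash,
    }
    entry["entry_hash"] = _digest(entry)
    return entry


def verify_chain(entries):
    """Return True if no entry was altered, removed, or reordered."""
    previous = ""
    for entry in entries:
        body = {k: v for k, v in entry.items() if k != "entry_hash"}
        if entry["previous_hash"] != previous or entry["entry_hash"] != _digest(body):
            return False
        previous = entry["entry_hash"]
    return True


# Hypothetical predictions from a model labelled "fraud-v3".
e1 = audit_record("fraud-v3", {"amount": 120.0}, 0.91)
e2 = audit_record("fraud-v3", {"amount": 15.0}, 0.02, previous_hash=e1["entry_hash"])
print("Chain valid:", verify_chain([e1, e2]))

tampered = dict(e1, prediction=0.10)
print("Chain valid after tampering:", verify_chain([tampered, e2]))
```

Each entry includes the hash of the one before it, so changing or deleting any record breaks verification for everything that follows.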

Cost Optimization

For big businesses, cost optimization is a major problem, especially when scaling up the deployment and upkeep of machine learning (ML) models. As businesses adopt MLOps practices, managing the expenses of data processing, model training, and deployment can quickly become complicated and costly.

Long-term sustainability in machine learning projects depends on managing resources efficiently while balancing cost against performance. For major organizations, whose operations and resources are often vast, cost-efficient procedures become essential to prevent overspending and to maximize the return on investment (ROI) of AI initiatives.

To address cost optimization, large businesses can:

- Adopt a Hybrid MLOps approach: Big businesses may use a hybrid MLOps approach instead of depending just on on-premise infrastructure or cloud services.

- Optimise Data Pipeline Infrastructure: To cut expenses related to maintaining data processing infrastructure, businesses can implement serverless data processing services like AWS Lambda or Google Cloud Functions.

- Review and Optimise Resource Usage Frequently: To make sure that resources are being used efficiently, businesses must audit their MLOps workflows on a frequent basis.
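A recurring resource audit can start as small as the sketch below, which flags deployed models that are idle or expensive per prediction. The usage figures, model names, and the cost ceiling are invented for illustration; real numbers would come from your cloud billing and serving logs.

```python
def flag_wasteful_models(usage, max_cost_per_1k=5.0):
    """Return names of models that are idle or exceed the cost ceiling.

    usage maps model name -> {"monthly_cost_usd": float, "predictions": int}.
    max_cost_per_1k is the (assumed) acceptable cost per 1,000 predictions.
    """
    flagged = []
    for name, stats in usage.items():
        predictions = stats["predictions"]
        if predictions == 0:
            # Paying for a deployment that serves no traffic.
            flagged.append(name)
            continue
        cost_per_1k = stats["monthly_cost_usd"] / predictions * 1000
        if cost_per_1k > max_cost_per_1k:
            flagged.append(name)
    return flagged


# Hypothetical monthly usage report.
usage = {
    "fraud_v3": {"monthly_cost_usd": 1200.0, "predictions": 2_000_000},
    "churn_v1": {"monthly_cost_usd": 300.0, "predictions": 0},
    "pricing_v2": {"monthly_cost_usd": 900.0, "predictions": 50_000},
}

print(flag_wasteful_models(usage))
```

Flagged models are candidates for retirement, consolidation, or a move to cheaper serving options such as batch or serverless execution.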

How to Choose the Right MLOps Stack for Your Business

Below are key considerations to help guide you in choosing the right MLOps stack for your business.

Understand Your Business Needs and Growth Potential

Assessing your business needs and the scale at which you expect to operate is the first step in choosing the best MLOps stack. Smaller businesses with fewer resources will want a solution that is affordable, user-friendly, and expandable.

Look for products flexible enough for your team to adopt and integrate with little upfront investment. An MLOps stack that offers cloud-based or lightweight options may be ideal for small firms just getting started with machine learning, since it can grow with the company without requiring a lot of infrastructure.

Evaluate Integration with Existing Systems

How effectively the MLOps stack integrates with your current infrastructure is another important consideration. Many companies already have processes in place, so switching to a new platform that doesn’t work well with them might cause problems or inefficiencies.

Selecting a stack that facilitates simple integration via APIs and pre-built connectors is crucial for businesses with outdated systems. This eliminates the need for intricate or expensive reconstruction and guarantees a smooth data flow between your MLOps system and other internal tools.

Prioritize Flexibility and Scalability

The MLOps stack should be able to grow with your company as it expands. With this adaptability, your machine learning processes will continue to function effectively even as data volumes, model complexity, and processing demands rise.

Companies, particularly those with plans for future expansion, should look for MLOps technologies that let them extend their infrastructure and capabilities without significant overhauls. Scalable systems that adjust resources dynamically based on demand are especially important for small enterprises with limited initial resources, since they let you keep expenses under control while expanding.

Pay Attention to Compliance, Governance, and Security

Security, governance, and regulatory compliance should be given top priority when choosing an MLOps stack, particularly for companies operating in highly regulated industries like government, healthcare, or finance.

To prevent legal problems, it is essential to protect sensitive data and make sure that data privacy rules are followed. Choose an MLOps platform with strong security features like audit trails, access control, and data encryption. These tools will assist in making sure that sensitive data is only accessible by authorized persons and that all actions are recorded for auditing purposes.


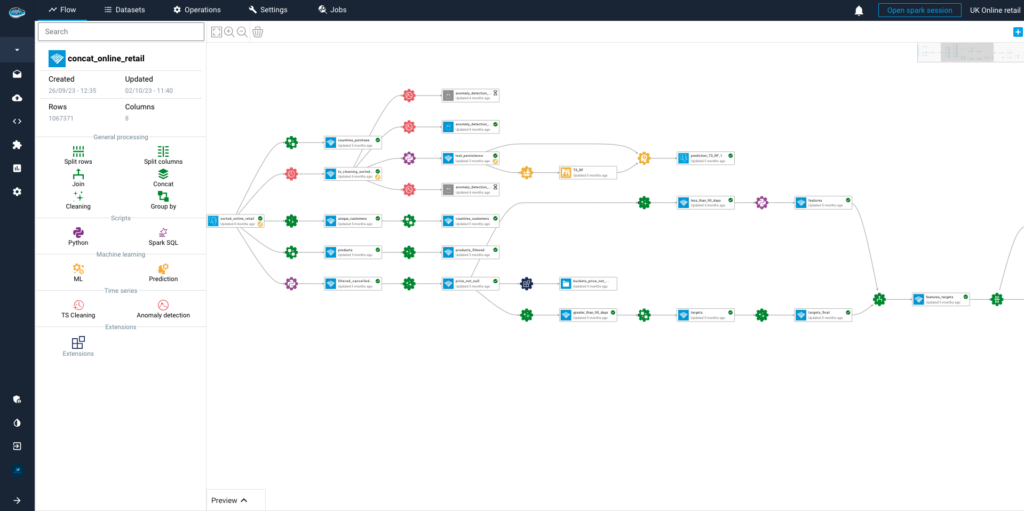

Streamlining MLOps with papAI: Key Benefits and Features

papAI is a scalable, modular, and automated AI platform designed for end-to-end ML lifecycle management, seamlessly integrating with existing infrastructures.

Businesses can use papAI to industrialize and run AI and data science projects. It is collaborative by design and was created to support teamwork on a single platform, with an interface that lets teams work together on challenging tasks.

Its features include several machine learning approaches, model deployment options, data exploration and visualization tools, and data cleaning and pre-processing capabilities.

Here’s an in-depth look at the key features and advantages of this innovative solution:

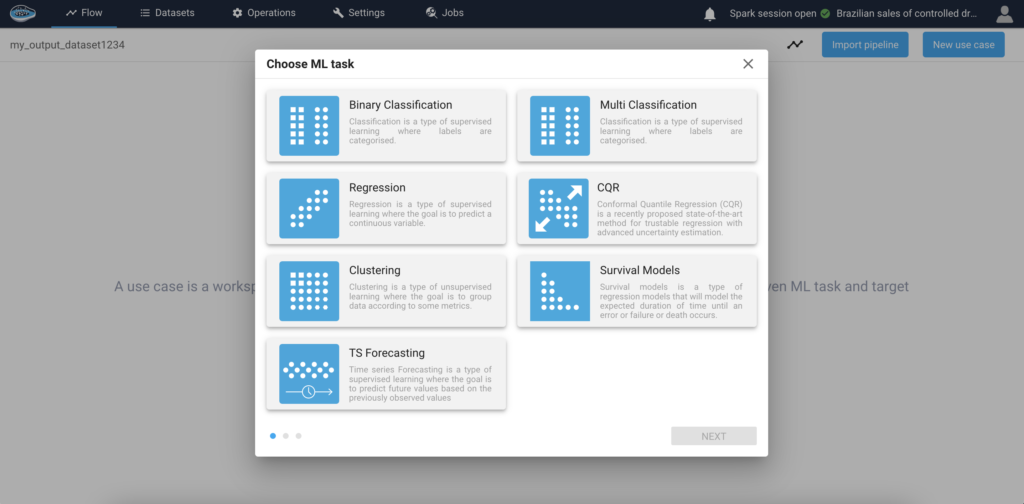

Simplified Model Implementation

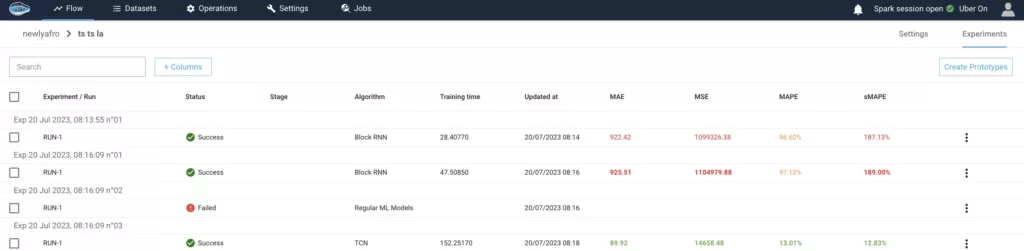

With the Model Hub, papAI significantly streamlines the process of deploying machine learning models. The Model Hub is papAI’s primary source of pre-built, deployment-ready models (binary classification, regression, clustering, time series forecasting, and more). As a result, businesses no longer have to begin model development from scratch. According to our most recent survey, people who use the papAI solution often save 90% of their time when putting AI ideas into action.

Efficient Model Monitoring & Tracking

Organisations can monitor the most important model performance metrics in real time with the range of monitoring tools papAI offers. Metrics such as accuracy and precision, along with other relevant indicators, let users track a model’s performance and spot possible problems or deviations. This ongoing monitoring enables proactive detection of any decline in model performance and helps ensure high-quality outputs. Up to 98% accuracy has been demonstrated for our clients.

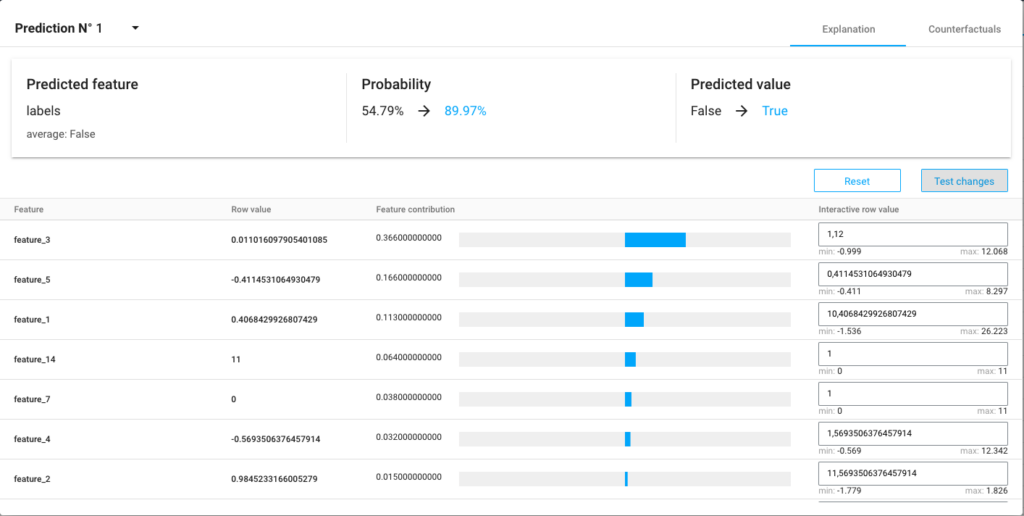

Enhanced Interpretability and Explainability of the Model

Deep learning and sophisticated machine learning models have historically been viewed as “black boxes,” making it difficult to comprehend how they generate predictions. The papAI solution addresses this problem directly by using cutting-edge model explainability approaches. It offers insights into the inner workings of the models and draws attention to the key elements and characteristics that affect forecasts. Thanks to papAI, stakeholders can now observe exactly how AI models make decisions, solving the conundrum of how they forecast the future.

What Makes papAI Unique?

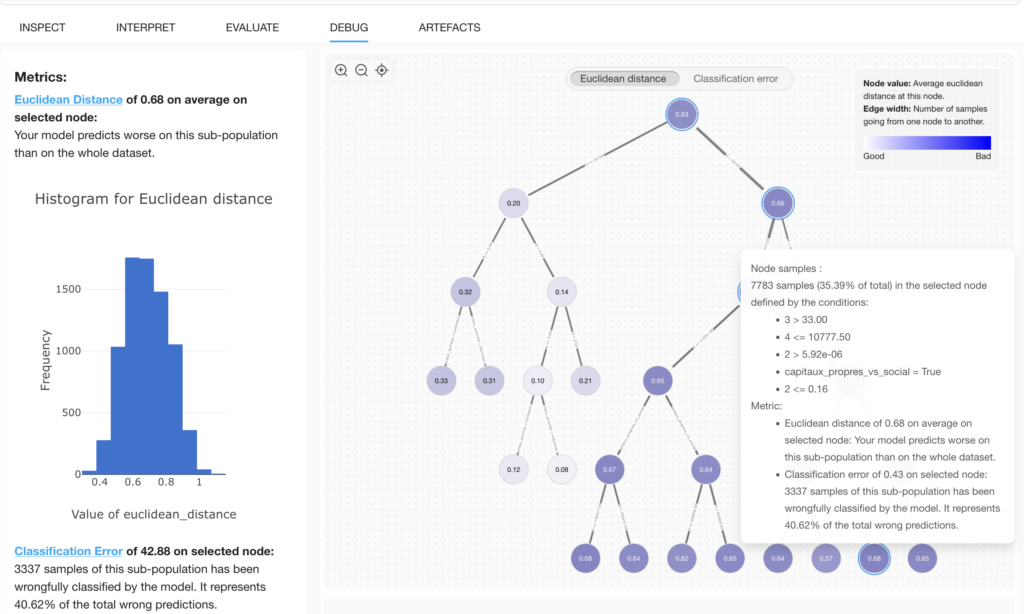

Error Analysis Tree for fairness and transparency

In papAI, an Error Analysis Tree for fairness and transparency is an advanced tool designed to automatically identify and understand model errors, going beyond traditional bias detection. It dissects model performance by segmenting the data into subgroups and pinpointing areas of high error, enabling businesses to uncover hidden issues in a model’s predictions.

This analysis tool ensures that fairness and transparency are prioritized by not only assessing model performance across various groups but also identifying specific instances where the model underperforms, making it easier to address issues systematically.

- Beyond Equity Measures: Traditional bias analysis usually concentrates on equity measures, which assess whether the model treats particular groups unjustly (for example, based on gender, ethnicity, or socioeconomic status). The Error Analysis Tree isn’t limited to equity-related issues. It offers a more comprehensive perspective, looking at performance across a range of data attributes rather than only pre-established protected groups.

- Root Cause Analysis Without Predefined Groups: Unlike bias analysis tools that require users to define protected groups (such as specific demographic or identity groups) beforehand, the Error Analysis Tree autonomously explores the data to discover and highlight the real sources of errors. It offers a deeper, data-driven exploration of where and why the model fails, providing more accurate insights into underlying problems.

- Performance Improvement Focus: While bias analysis is often focused on measuring discrepancies between groups, error analysis is more performance-oriented. The goal of error analysis is not merely to detect whether disparities exist between groups, but to identify specific areas where the model is weak or inaccurate. This facilitates targeted improvements to the model, making it more effective and fair across all subgroups.
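The general idea of segmenting data to locate high-error subgroups, without defining protected groups in advance, can be illustrated with a simplified, one-level sketch. This is not papAI’s implementation. A tree-based tool would go further and split the worst segments recursively, and the feature names and rows below are hypothetical.

```python
from collections import defaultdict


def error_rate(rows):
    """Share of rows where the prediction differs from the true label."""
    return sum(1 for r in rows if r["y_true"] != r["y_pred"]) / len(rows)


def worst_segments(rows, features, min_size=2):
    """Rank (feature, value) segments by error rate, highest first."""
    segments = defaultdict(list)
    for row in rows:
        for feature in features:
            segments[(feature, row[feature])].append(row)
    ranked = [
        (feature, value, error_rate(members), len(members))
        for (feature, value), members in segments.items()
        if len(members) >= min_size  # ignore segments too small to trust
    ]
    return sorted(ranked, key=lambda s: s[2], reverse=True)


# Hypothetical scored records with two candidate features.
rows = [
    {"region": "north", "channel": "web", "y_true": 1, "y_pred": 1},
    {"region": "north", "channel": "app", "y_true": 0, "y_pred": 0},
    {"region": "south", "channel": "web", "y_true": 1, "y_pred": 0},
    {"region": "south", "channel": "app", "y_true": 0, "y_pred": 1},
    {"region": "north", "channel": "web", "y_true": 0, "y_pred": 0},
    {"region": "south", "channel": "web", "y_true": 1, "y_pred": 1},
]

ranking = worst_segments(rows, ["region", "channel"])
for feature, value, rate, size in ranking:
    print(f"{feature}={value}: error rate {rate:.2f} over {size} rows")
```

Here every feature is scanned for weak spots, so the segment with the highest error rate surfaces on its own instead of depending on which groups an analyst thought to check.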

Leverage papAI's MLOps Capabilities for Superior Model Performance

Learn how the papAI solution makes it easier to deploy and manage machine learning models so you can realize their full potential.

During the demo, our AI expert will walk you through papAI’s sophisticated features, simplified workflow, and integration options so that you fully grasp its potential. Use papAI to transform your company’s AI strategy.

Activate your free session today and see how our AI-driven platform can elevate your MLOps transformation journey.

MLOps, or Machine Learning Operations, is a discipline that ensures effective deployment, monitoring, and management of machine learning models in production. It integrates data scientists and IT professionals, automating the machine learning lifecycle. MLOps is critical for organizations as it streamlines the entire process, from data preparation and model training to deployment and performance monitoring, ensuring that models remain accurate, relevant, and scalable over time.

Small businesses often struggle with hiring qualified AI talent, managing limited resources, and scaling their AI initiatives. The high demand for skilled professionals, such as MLOps engineers, combined with small budgets and fewer opportunities for growth, makes it difficult to retain top talent.

Large enterprises face complex data management issues due to the volume, variety, and distribution of data across various systems. They also struggle with organizational silos that impede cross-functional collaboration and slow down AI adoption. Additionally, ensuring compliance with strict regulations and managing costs associated with large-scale AI deployments are significant challenges.

When selecting an MLOps stack, businesses should prioritize flexibility, scalability, integration with existing systems, and compliance with security and regulatory standards. For small businesses, cloud-based solutions with minimal upfront investment and scalability are crucial. Larger organizations must ensure that the stack integrates seamlessly with current infrastructure and can handle growing data volumes and complexity.

Interested in discovering papAI?

Watch our platform in action now
